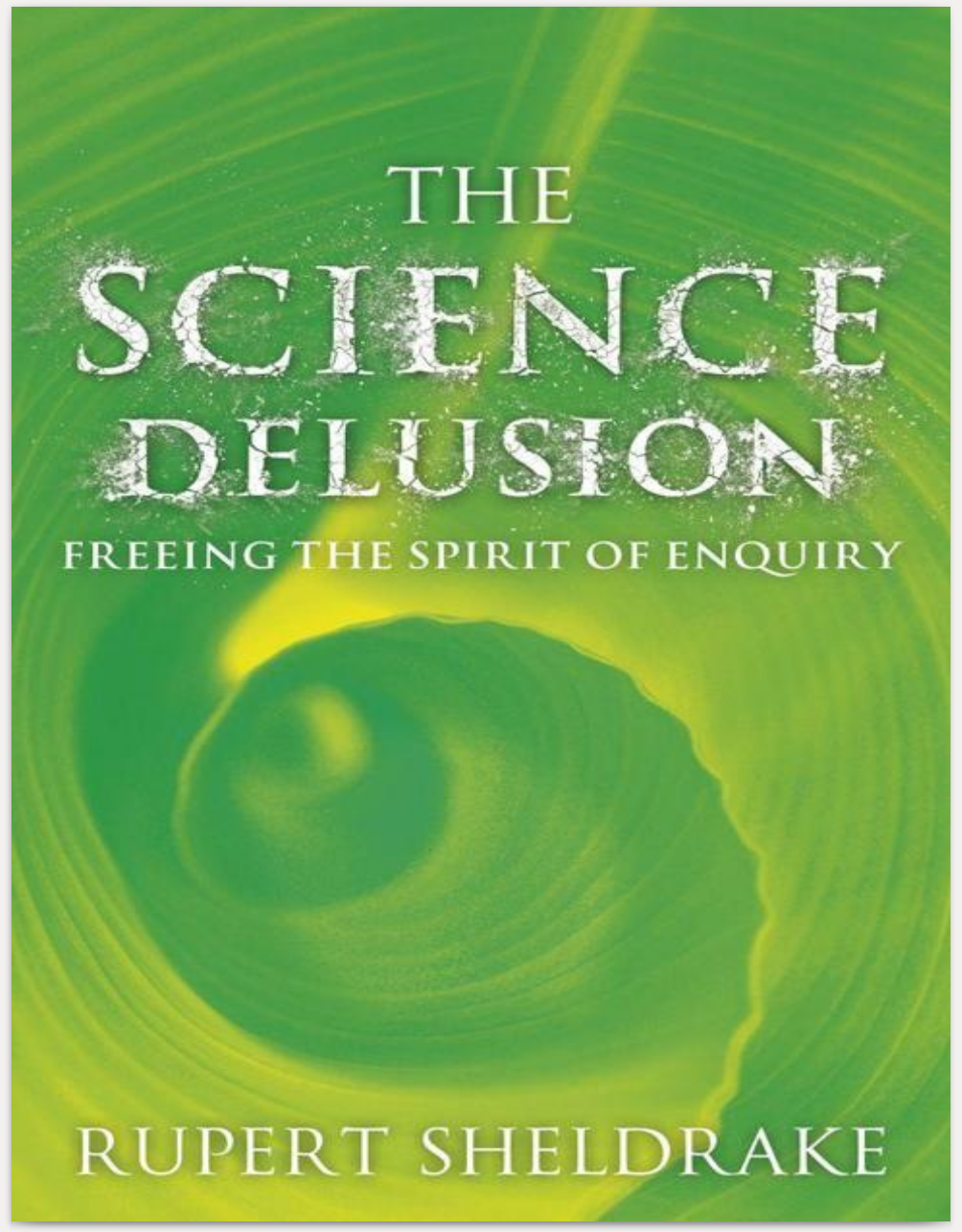

The Science Delusion by Rupert Sheldrake

In The Science Delusion: Freeing the Spirit of Enquiry (published in the US as Science Set Free), Rupert Sheldrake shows the ways in which science is being constricted by assumptions that have, over the years, hardened into dogmas. Such dogmas are not only limiting, but dangerous for the future of humanity.

According to these principles, all of reality is material or physical; the world is a machine, made up of inanimate matter; nature is purposeless; consciousness is nothing but the physical activity of the brain; free will is an illusion; God exists only as an idea in human minds, imprisoned within our skulls.

But should science be a belief-system, or a method of enquiry? Sheldrake shows that the materialist ideology is moribund; under its sway, increasingly expensive research is reaping diminishing returns while societies around the world are paying the price.

In the skeptical spirit of true science, Sheldrake turns the ten fundamental dogmas of materialism into exciting questions, and shows how all of them open up startling new possibilities for discovery.

Science Set Free will radically change your view of what is real and what is possible.

Posted on 11 Aug 2020

- Preface
- Introduction
- Prologue
- 1 Is Nature Mechanical?
- 2 Is the Total Amount of Matter and Energy Always the Same?
- 3 Are the Laws of Nature Fixed?
- 4 Is Matter Unconscious?
- 5 Is Nature Purposeless?
- 6 Is All Biological Inheritance Material?
- 7 Are Memories Stored as Material Traces?
- 8 Are Minds Confined to Brains?
- 9 Are Psychic Phenomena Illusory?
- 10 Is Mechanistic Medicine the Only Kind that Really Works?
- 11 Illusions of Objectivity
- 12 Scientific Futures
- Notes
- References


My interest in science began when I was very young. As a child I kept many kinds of animals, ranging from caterpillars and tadpoles to pigeons, rabbits, tortoises and a dog. My father, a herbalist, pharmacist and microscopist, taught me about plants from my earliest years. He showed me a world of wonders through his microscope, including tiny creatures in drops of pond water, scales on butterflies’ wings, shells of diatoms, cross-sections of plant stems and a sample of radium that glowed in the dark. I collected plants and read books on natural history, like Fabre’s Book of Insects, which told the life stories of scarab beetles, praying mantises and glow-worms. By the time I was twelve years old I wanted to become a biologist.

I studied sciences at school and then at Cambridge University, where I majored in biochemistry. I liked what I was doing, but found the focus very narrow, and wanted to see a bigger picture. I had a life-changing opportunity to widen my perspective when I was awarded a Frank Knox fellowship in the graduate school at Harvard, where I studied the history and philosophy of science.

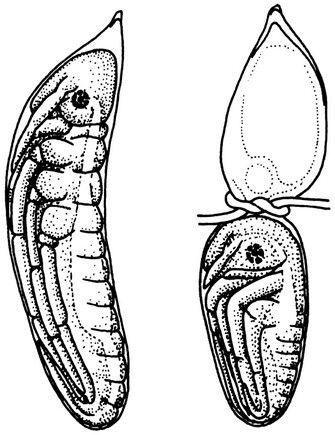

I returned to Cambridge to do research on the development of plants. In the course of my PhD project, I made an original discovery: dying cells play a major part in the regulation of plant growth, releasing the plant hormone auxin as they break down in the process of ‘programmed cell death’. Inside growing plants, new wood cells dissolve themselves as they die, leaving their cellulose walls as microscopic tubes through which water is conducted in stems, roots and veins of leaves. I discovered that auxin is produced as cells die,1 that dying cells stimulate more growth; more growth leads to more death, and hence to more growth.

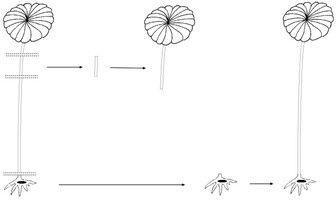

After receiving my PhD, I was elected to a research fellowship of Clare College, Cambridge, where I was director of studies in cell biology and biochemistry, teaching students in tutorials and lab classes. I was then appointed a research fellow of the Royal Society and continued my research at Cambridge on plant hormones, studying the way in which auxin is transported from the shoots towards the root tips. With my colleague Philip Rubery, I worked out the molecular basis of polar auxin transport,2 providing a foundation on which much subsequent research on plant polarity has been built.

Funded by the Royal Society, I spent a year at the University of Malaya, studying rain forest ferns, and at the Rubber Research Institute of Malaya I discovered how the flow of latex in rubber trees is regulated genetically, and I shed new light on the development of latex vessels.3

When I returned to Cambridge, I developed a new hypothesis of ageing in plants and animals, including humans. All cells age. When they stop growing, they eventually die. My hypothesis is about rejuvenation, and proposes that harmful waste products build up in all cells, causing them to age, but they can produce rejuvenated daughter cells by asymmetric cell divisions in which one cell receives most of these waste products and is doomed, while the other is wiped clean. The most rejuvenated of all cells are eggs. In both plants and animals, two successive cell divisions (meiosis) produce an egg cell and three sister cells, which quickly die. My hypothesis was published in Nature in 1974 in a paper called ‘The ageing, growth and death of cells’.4 ‘Programmed cell death’, or ‘apoptosis’, has since become a major field of research, important for our understanding of diseases such as cancer and HIV, as well as tissue regeneration through stem cells. Many stem cells divide asymmetrically, producing a new, rejuvenated stem cell and a cell that differentiates, ages and dies. My hypothesis is that the rejuvenation of stem cells through cell division depends on their sisters paying the price of mortality.

Wanting to broaden my horizons and do practical research that could benefit some of the world’s poorest people, I left Cambridge to join the International Crops Research Institute for the Semi-Arid Tropics, near Hyderabad, India, as Principal Plant Physiologist, working on chickpeas and pigeonpeas.5 We bred new high-yielding varieties of these crops, and developed multiple cropping systems6 that are now widely used by farmers in Asia and Africa, greatly increasing yields.

A new phase in my scientific career began in 1981 with the publication of my book A New Science of Life, in which I suggested a hypothesis of form-shaping fields, called morphogenetic fields, that control the development of animal embryos and the growth of plants. I proposed that these fields have an inherent memory, given by a process called morphic resonance. This hypothesis was supported by the available evidence and gave rise to a range of experimental tests, summarised in the new edition of A New Science of Life (2009).

After my return to England from India, I continued to investigate plant development, and also started research with homing pigeons, which had intrigued me since I kept pigeons as a child. How do pigeons find their way home from hundreds of miles away, across unfamiliar terrain and even across the sea? I thought they might be linked to their home by a field that acted like an invisible elastic band, pulling them homewards. Even if they have a magnetic sense as well, they cannot find their home just by knowing compass directions. If you were parachuted into unknown territory with a compass, you would know where north was, but not where your home was.

I came to realise that pigeon navigation was just one of many unexplained powers of animals. Another was the ability of some dogs to know when their owners are coming home, seemingly telepathically. It was not difficult or expensive to do research on these subjects, and the results were fascinating. In 1994 I published a book called Seven Experiments that Could Change the World in which I proposed low-cost tests that could change our ideas about the nature of reality, with results that were summarised in a new edition (2002), and in my books Dogs That Know When Their Owners Are Coming Home (1999; new edition 2011) and The Sense of Being Stared At (2003).

For the last twenty years I have been a Fellow of the Institute of Noetic Sciences, near San Francisco, and a visiting professor at several universities, including the Graduate Institute in Connecticut. I have published more than eighty papers in peer-reviewed scientific journals, including several in Nature. I belong to a range of scientific societies, including the Society for Experimental Biology and the Society for Scientific Exploration, and I am a fellow of the Zoological Society and the Cambridge Philosophical Society. I give seminars and lectures on my research at a wide variety of universities, research institutes and scientific conferences in Britain, continental Europe, North and South America, India and Australasia.

I have spent all my adult life as a scientist, and I strongly believe in the importance of the scientific approach. Yet I have become increasingly convinced that the sciences have lost much of their vigour, vitality and curiosity. Dogmatic ideology, fear-based conformity and institutional inertia are inhibiting scientific creativity.

With scientific colleagues, I have been struck over and over again by the contrast between public and private discussions. In public, scientists are very aware of the powerful taboos that restrict the range of permissible topics; in private they are often more adventurous.

I have written this book because I believe that the sciences will be more exciting and engaging when they move beyond the dogmas that restrict free enquiry and imprison imaginations.

Many people have contributed to these explorations through discussions, debates, arguments and advice, and I cannot begin to mention everyone to whom I am indebted. This book is dedicated to all those who have helped and encouraged me.

I am grateful for the financial support that has enabled me to write this book: from Trinity College, Cambridge, where I was the Perrott-Warrick Senior Researcher from 2005 to 2010; from Addison Fischer and the Planet Heritage Foundation; and from the Watson Family Foundation and the Institute of Noetic Sciences. I also thank my research assistant, Pamela Smart, and my webmaster, John Caton, for their much-appreciated help.

This book has benefited from many comments on drafts. In particular, I thank Bernard Carr, Angelika Cawdor, Nadia Cheney, John Cobb, Ted Dace, Larry Dossey, Lindy Dufferin and Ava, Douglas Hedley, Francis Huxley, Robert Jackson, Jürgen Krönig, James Le Fanu, Peter Fry, Charlie Murphy, Jill Purce, Anthony Ramsay, Edward St Aubyn, Cosmo Sheldrake, Merlin Sheldrake, Jim Slater, Pamela Smart, Peggy Taylor and Christoffer van Tulleken as well as my agent Jim Levine, in New York, and my editor at Hodder and Stoughton, Mark Booth.

For all those who have helped and encouraged me, especially my wife Jill and our sons Merlin and Cosmo.

The Ten Dogmas of Modern Science

The ‘scientific worldview’ is immensely influential because the sciences have been so successful. They touch all our lives through technologies and through modern medicine. Our intellectual world has been transformed by an immense expansion of knowledge, down into the most microscopic particles of matter and out into the vastness of space, with hundreds of billions of galaxies in an ever-expanding universe.

Yet in the second decade of the twenty-first century, when science and technology seem to be at the peak of their power, when their influence has spread all over the world and when their triumph seems indisputable, unexpected problems are disrupting the sciences from within. Most scientists take it for granted that these problems will eventually be solved by more research along established lines, but some, including myself, think they are symptoms of a deeper malaise.

In this book, I argue that science is being held back by centuries-old assumptions that have hardened into dogmas. The sciences would be better off without them: freer, more interesting, and more fun.

The biggest scientific delusion of all is that science already knows the answers. The details still need working out but, in principle, the fundamental questions are settled.

Contemporary science is based on the claim that all reality is material or physical. There is no reality but material reality. Consciousness is a by-product of the physical activity of the brain. Matter is unconscious. Evolution is purposeless. God exists only as an idea in human minds, and hence in human heads.

These beliefs are powerful, not because most scientists think about them critically but because they don’t. The facts of science are real enough; so are the techniques that scientists use, and the technologies based on them. But the belief system that governs conventional scientific thinking is an act of faith, grounded in a nineteenth-century ideology.

This book is pro-science. I want the sciences to be less dogmatic and more scientific. I believe that the sciences will be regenerated when they are liberated from the dogmas that constrict them.

The scientific creed

Here are the ten core beliefs that most scientists take for granted.

- Everything is essentially mechanical. Dogs, for example, are complex mechanisms, rather than living organisms with goals of their own. Even people are machines, ‘lumbering robots’, in Richard Dawkins’s vivid phrase, with brains that are like genetically programmed computers.

- All matter is unconscious. It has no inner life or subjectivity or point of view. Even human consciousness is an illusion produced by the material activities of brains.

- The total amount of matter and energy is always the same (with the exception of the Big Bang, when all the matter and energy of the universe suddenly appeared).

- The laws of nature are fixed. They are the same today as they were at the beginning, and they will stay the same forever.

- Nature is purposeless, and evolution has no goal or direction.

- All biological inheritance is material, carried in the genetic material, DNA, and in other material structures.

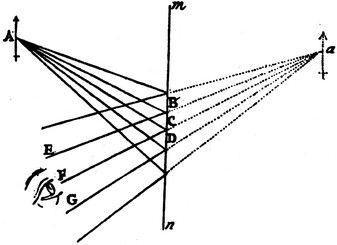

- Minds are inside heads and are nothing but the activities of brains. When you look at a tree, the image of the tree you are seeing is not ‘out there’, where it seems to be, but inside your brain.

- Memories are stored as material traces in brains and are wiped out at death.

- Unexplained phenomena like telepathy are illusory.

- Mechanistic medicine is the only kind that really works.

Together, these beliefs make up the philosophy or ideology of materialism, whose central assumption is that everything is essentially material or physical, even minds. This belief-system became dominant within science in the late nineteenth century, and is now taken for granted. Many scientists are unaware that materialism is an assumption: they simply think of it as science, or the scientific view of reality, or the scientific worldview. They are not actually taught about it, or given a chance to discuss it. They absorb it by a kind of intellectual osmosis.

In everyday usage, materialism refers to a way of life

In the spirit of radical scepticism, I turn each of these ten doctrines into a question. Entirely new vistas open up when a widely accepted assumption is taken as the beginning of an enquiry, rather than as an unquestionable truth. For example, the assumption that nature is machine-like or mechanical becomes a question: ‘Is nature mechanical?’ The assumption that matter is unconscious becomes ‘Is matter unconscious?’ And so on.

In the Prologue I look at the interactions of science, religion and power, and then in Chapters 1 to 10, I examine each of the ten dogmas. At the end of each chapter, I discuss what difference this topic makes and how it affects the way we live our lives. I also pose several further questions, so that any readers who want to discuss these subjects with friends or colleagues will have some useful starting points. Each chapter is followed by a summary.

The credibility crunch for the ‘scientific worldview’

For more than two hundred years, materialists have promised that science will eventually explain everything in terms of physics and chemistry. Science will prove that living organisms are complex machines, minds are nothing but brain activity and nature is purposeless. Believers are sustained by the faith that scientific discoveries will justify their beliefs. The philosopher of science Karl Popper called this stance ‘promissory materialism’ because it depends on issuing promissory notes for discoveries not yet made.1 Despite all the achievements of science and technology, materialism is now facing a credibility crunch that was unimaginable in the twentieth century.

In 1963, when I was studying biochemistry at Cambridge University, I was invited to a series of private meetings with Francis Crick and Sydney Brenner in Brenner’s rooms in King’s College, along with a few of my classmates. Crick and Brenner had recently helped to ‘crack’ the genetic code. Both were ardent materialists and Crick was also a militant atheist. They explained there were two major unsolved problems in biology: development and consciousness. They had not been solved because the people who worked on them were not molecular biologists – or very bright. Crick and Brenner were going to find the answers within ten years, or maybe twenty. Brenner would take developmental biology, and Crick consciousness. They invited us to join them.

Both tried their best. Brenner was awarded the Nobel Prize in 2002 for his work on the development of a tiny worm, Caenorhabditis elegans. Crick corrected the manuscript of his final paper on the brain the day before he died in 2004. At his funeral, his son Michael said that what made him tick was not the desire to be famous, wealthy or popular, but ‘to knock the final nail into the coffin of vitalism’. (Vitalism is the theory that living organisms are truly alive, and not explicable in terms of physics and chemistry alone.)

Crick and Brenner failed. The problems of development and consciousness remain unsolved. Many details have been discovered, dozens of genomes have been sequenced, and brain scans are ever more precise. But there is still no proof that life and minds can be explained by physics and chemistry alone (see Chapters 1, 4 and 8).

The fundamental proposition of materialism is that matter is the only reality. Therefore consciousness is nothing but brain activity. It is either like a shadow, an ‘epiphenomenon’, that does nothing, or it is just another way of talking about brain activity. However, among contemporary researchers in neuroscience and consciousness studies there is no consensus about the nature of minds. Leading journals such as Behavioural and Brain Sciences and the Journal of Consciousness Studies publish many articles that reveal deep problems with the materialist doctrine. The philosopher David Chalmers has called the very existence of subjective experience the ‘hard problem’. It is hard because it defies explanation in terms of mechanisms. Even if we understand how eyes and brains respond to red light, the experience of redness is not accounted for.

In biology and psychology the credibility rating of materialism is falling. Can physics ride to the rescue? Some materialists prefer to call themselves physicalists, to emphasise that their hopes depend on modern physics, not nineteenth-century theories of matter. But physicalism’s own credibility rating has been reduced by physics itself, for four reasons.

First, some physicists insist that quantum mechanics cannot be formulated without taking into account the minds of observers. They argue that minds cannot be reduced to physics because physics presupposes the minds of physicists.2

Second, the most ambitious unified theories of physical reality, string and M-theories, with ten and eleven dimensions respectively, take science into completely new territory. Strangely, as Stephen Hawking tells us in his book The Grand Design (2010), ‘No one seems to know what the “M” stands for, but it may be “master”, “miracle” or “mystery”.’ According to what Hawking calls ‘model-dependent realism’, different theories may have to be applied in different situations. ‘Each theory may have its own version of reality, but according to model-dependent realism, that is acceptable so long as the theories agree in their predictions whenever they overlap, that is, whenever they can both be applied.’3

String theories and M-theories are currently untestable so ‘model-dependent realism’ can only be judged by reference to other models, rather than by experiment. It also applies to countless other universes, none of which has ever been observed. As Hawking points out,


M-theory has solutions that allow for different universes with different apparent laws, depending on how the internal space is curled. M-theory has solutions that allow for many different internal spaces, perhaps as many as 10^500, which means it allows for 10^500 different universes, each with its own laws … The original hope of physics to produce a single theory explaining the apparent laws of our universe as the unique possible consequence of a few simple assumptions may have to be abandoned.4

Some physicists are deeply sceptical about this entire approach, as the theoretical physicist Lee Smolin shows in his book The Trouble With Physics: The Rise of String Theory, the Fall of a Science and What Comes Next (2008).5 String theories, M-theories and ‘model-dependent realism’ are a shaky foundation for materialism or physicalism or any other belief system, as discussed in Chapter 1.

Third, since the beginning of the twenty-first century, it has become apparent that the known kinds of matter and energy make up only about four per cent of the universe. The rest consists of ‘dark matter’ and ‘dark energy’. The nature of 96 per cent of physical reality is literally obscure (see Chapter 2).

Fourth, the Cosmological Anthropic Principle asserts that if the laws and constants of nature had been slightly different at the moment of the Big Bang, biological life could never have emerged, and hence we would not be here to think about it (see Chapter 3). So did a divine mind fine-tune the laws and constants in the beginning? To avoid a creator God emerging in a new guise, most leading cosmologists prefer to believe that our universe is one of a vast, and perhaps infinite, number of parallel universes, all with different laws and constants, as M-theory also suggests. We just happen to exist in the one that has the right conditions for us.6

This multiverse theory is the ultimate violation of Occam’s Razor, the philosophical principle that ‘entities must not be multiplied beyond necessity’, or in other words, that we should make as few assumptions as possible. It also has the major disadvantage of being untestable.7 And it does not even succeed in getting rid of God. An infinite God could be the God of an infinite number of universes.8

Materialism provided a seemingly simple, straightforward worldview in the late nineteenth century, but twenty-first-century science has left it behind. Its promises have not been fulfilled, and its promissory notes have been devalued by hyperinflation.

I am convinced that the sciences are being held back by assumptions that have hardened into dogmas, maintained by powerful taboos. These beliefs protect the citadel of established science, but act as barriers against open-minded thinking.

Science, Religion and Power

Since the late nineteenth century, science has dominated and transformed the earth. It has touched everyone’s lives through technology and modern medicine. Its intellectual prestige is almost unchallenged. Its influence is greater than that of any other system of thought in all of human history. Although most of its power comes from its practical applications, it also has a strong intellectual appeal. It offers new ways of understanding the world, including the mathematical order at the heart of atoms and molecules, the molecular biology of genes, and the vast sweep of cosmic evolution.

The scientific priesthood

Francis Bacon (1561–1626), a politician and lawyer who became Lord Chancellor of England, foresaw the power of organised science more than anyone else. To clear the way, he needed to show that there was nothing sinister about acquiring power over nature. When he was writing, there was a widespread fear of witchcraft and black magic, which he tried to counteract by claiming that knowledge of nature was God-given, not inspired by the devil. Science was a return to the innocence of the first man, Adam, in the Garden of Eden before the Fall.

Bacon argued that the first book of the Bible, Genesis, justified scientific knowledge. He equated man’s knowledge of nature with Adam’s naming of the animals. God ‘brought them unto Adam to see what he would call them, and what Adam called every living creature, that was the name thereof’. (Genesis 2: 19–20) This was literally man’s knowledge, because Eve was not created until two verses later. Bacon argued that man’s technological mastery of nature was the recovery of a God-given power, rather than something new. He confidently assumed that people would use their new knowledge wisely and well: ‘Only let the human race recover that right over nature which belongs to it by divine bequest; the exercise thereof will be governed by sound reason and true religion.’1

The key to this new power over nature was organised institutional research. In New Atlantis (1624), Bacon described a technocratic Utopia in which a scientific priesthood made decisions for the good of the state as a whole. The Fellows of this scientific ‘Order or Society’ wore long robes and were treated with a respect that their power and dignity required. The head of the order travelled in a rich chariot, under a radiant golden image of the sun. As he rode in procession, ‘he held up his bare hand, as he went, as blessing the people’.

The general purpose of this foundation was ‘the knowledge of causes and secret motions of things; and the enlarging of human empire, to the effecting of all things possible’. The Society was equipped with machinery and facilities for testing explosives and armaments, experimental furnaces, gardens for plant breeding, and dispensaries.2

This visionary scientific institution foreshadowed many features of institutional research, and was a direct inspiration for the founding of the Royal Society in London in 1660, and for many other national academies of science. But although the members of these academies were often held in high esteem, none achieved the grandeur and political power of Bacon’s imaginary prototypes. Their glory was continued even after their deaths in a gallery, like a Hall of Fame, where their images were preserved. ‘For upon every invention of value we erect a statue to the inventor, and give him a liberal and honourable reward.’3

In England in Bacon’s time (and still today) the Church of England was linked to the state as the Established Church. Bacon envisaged that the scientific priesthood would also be linked to the state through state patronage, forming a kind of established church of science. And here again he was prophetic. In nations both capitalist and Communist, the official academies of science remain the centres of power of the scientific establishment. There is no separation of science and state. Scientists play the role of an established priesthood, influencing government policies on the arts of warfare, industry, agriculture, medicine, education and research.

Bacon coined the ideal slogan for soliciting financial support from governments and investors: ‘Knowledge is power.’4 But the success of scientists in eliciting funding from governments varied from country to country. The systematic state funding of science began much earlier in France and Germany than in Britain and the United States where, until the latter half of the nineteenth century, most research was privately funded or carried out by wealthy amateurs like Charles Darwin.5

In France, Louis Pasteur (1822–95) was an influential proponent of science as a truth-finding religion, with laboratories like temples through which mankind would be elevated to its highest potential:


Take interest, I beseech you, in those sacred institutions which we designate under the expressive name of laboratories. Demand that they be multiplied and adorned; they are the temples of wealth and of the future. There it is that humanity grows, becomes stronger and better.6

By the beginning of the twentieth century, science was almost entirely institutionalised and professionalised, and after the Second World War expanded enormously under government patronage, as well as through corporate investment.7 The highest level of funding is in the United States, where in 2008 the total expenditure on research and development was $398 billion, of which $104 billion came from the government.8 But governments and corporations do not usually pay scientists to do research because they want innocent knowledge, like that of Adam before the Fall. Naming animals, as in classifying endangered species of beetles in tropical rainforests, is a low priority. Most funding is a response to Bacon’s persuasive slogan ‘knowledge is power’.

By the 1950s, when institutional science had reached an unprecedented level of power and prestige, the historian of science George Sarton approvingly described the situation in a way that sounds like the Roman Catholic Church before the Reformation:


Truth can be determined only by the judgement of experts … Everything is decided by very small groups of men, in fact, by single experts whose results are carefully checked, however, by a few others. The people have nothing to say but simply to accept the decisions handed out to them. Scientific activities are controlled by universities, academies and scientific societies, but such control is as far removed from popular control as it possibly could be.9

Bacon’s vision of a scientific priesthood has now been realised on a global scale. But his confidence that man’s power over nature would be guided by ‘sound reason and true religion’ was misplaced.

The fantasy of omniscience

The fantasy of omniscience is a recurrent theme in the history of science, as scientists aspire to a total godlike knowledge. At the beginning of the nineteenth century, the French physicist Pierre-Simon Laplace imagined a scientific mind capable of knowing and predicting everything:


Consider an intelligence which, at any instant, could have a knowledge of all the forces controlling nature together with the momentary conditions of all the entities of which nature consists. If this intelligence were powerful enough to submit all these data to analysis it would be able to embrace in a single formula the movements of the largest bodies in the universe and those of the lightest atoms; for it nothing would be uncertain; the past and future would be equally present for its eyes.10

These ideas were not confined to physicists. Thomas Henry Huxley, who did so much to propagate Darwin’s theory of evolution, extended mechanical determinism to cover the entire evolutionary process:


If the fundamental proposition of evolution is true, that the entire world, living and not living, is the result of the mutual interaction, according to definite laws, of the forces possessed by the molecules of which the primitive nebulosity of the universe was composed, it is no less certain the existing world lay, potentially, in the cosmic vapour, and that a sufficient intellect could, from a knowledge of the properties of the molecules of that vapour, have predicted, say, the state of the fauna of Great Britain in 1869.11

When the belief in determinism was applied to the activity of the human brain, it resulted in a denial of free will, on the grounds that everything about the molecular and physical activities of the brain was in principle predictable. Yet this conviction rested not on scientific evidence, but simply on the assumption that everything was fully determined by mathematical laws.

Even today, many scientists assume that free will is an illusion. Not only is the activity of the brain determined by machine-like processes, but there is no non-mechanical self capable of making choices. For example, in 2010, the British brain scientist Patrick Haggard asserted, ‘As a neuroscientist, you’ve got to be a determinist. There are physical laws, which the electrical and chemical events in the brain obey. Under identical circumstances, you couldn’t have done otherwise. There’s no “I” which can say, “I want to do otherwise.”’12 However, Haggard does not let his scientific beliefs interfere with his personal life: ‘I keep my scientific and personal lives pretty separate. I still seem to decide what films I go to see, I don’t feel it’s predestined, though it must be determined somewhere in my brain.’

Indeterminism and chance

In 1927, with the recognition of the uncertainty principle in quantum physics, it became clear that indeterminism was an essential feature of the physical world, and physical predictions could be made only in terms of probabilities. The fundamental reason is that quantum phenomena are wavelike, and a wave is by its very nature spread out in space and time: it cannot be localised at a single point at a particular instant; or, more technically, its position and momentum cannot both be known precisely.13 Quantum theory deals in statistical probabilities, not certainties. The fact that one possibility is realised in a quantum event rather than another is a matter of chance.

Does quantum indeterminism affect the question of free will? Not if indeterminism is purely random. Choices made at random are no freer than if they are fully determined.14

In neo-Darwinian evolutionary theory randomness plays a central role through the chance mutations of genes, which are quantum events. With different chance events, evolution would happen differently. T. H. Huxley was wrong in believing that the course of evolution was predictable. ‘Replay the tape of life,’ said the evolutionary biologist Stephen Jay Gould, ‘and a different set of survivors would grace our planet today.’15

In the twentieth century it became clear that not just quantum processes but almost all natural phenomena are probabilistic, including the turbulent flow of liquids, the breaking of waves on the seashore, and the weather: they show a spontaneity and indeterminism that eludes exact prediction. Weather forecasters still get it wrong in spite of having powerful computers and a continuous stream of data from satellites. This is not because they are bad scientists but because weather is intrinsically unpredictable in detail. It is chaotic, not in the everyday sense that there is no order at all, but in the sense that it is not precisely predictable. To some extent, the weather can be modelled mathematically in terms of chaotic dynamics, sometimes called ‘chaos theory’, but these models do not make exact predictions.16 Certainty is as unachievable in the everyday world as it is in quantum physics. Even the orbits of the planets around the sun, long considered the centrepiece of mechanistic science, turn out to be chaotic over long time scales.17

The belief in determinism, strongly held by many nineteenth- and early-twentieth-century scientists, turned out to be a delusion. The freeing of scientists from this dogma led to a new appreciation of the indeterminism of nature in general, and of evolution in particular. The sciences have not come to an end by abandoning the belief in determinism. Likewise, they will survive the loss of the dogmas that still bind them; they will be regenerated by new possibilities.

Further fantasies of omniscience

By the end of the nineteenth century, the fantasy of scientific omniscience went far beyond a belief in determinism. In 1888, the Canadian-American astronomer Simon Newcomb wrote, ‘We are probably nearing the limit of all we can know about astronomy.’ In 1894, Albert Michelson, later to win the Nobel Prize for Physics, declared, ‘The more important fundamental laws and facts of physical science have all been discovered, and these are now so firmly established that the possibility of their ever being supplanted in consequence of new discoveries is exceedingly remote … Our future discoveries must be looked for in the sixth place of decimals.’18 And in 1900 William Thomson, Lord Kelvin, the physicist and inventor of intercontinental telegraphy, expressed this supreme confidence in an often-quoted (although perhaps apocryphal) claim: ‘There is nothing new to be discovered in physics now. All that remains is more and more precise measurement.’

These convictions were shattered in the twentieth century through quantum physics, relativity theory, nuclear fission and fusion (as in atom and hydrogen bombs), the discovery of galaxies beyond our own, and the Big Bang theory – the idea that the universe began very small and very hot some 14 billion years ago and has been growing, cooling and evolving ever since.

Nevertheless, by the end of the twentieth century, the fantasy of omniscience was back again, this time fuelled by the triumphs of twentieth-century physics and by the discoveries of neurobiology and molecular biology. In 1997, John Horgan, a senior science writer at Scientific American, published a book called The End of Science: Facing the Limits of Knowledge in the Twilight of the Scientific Age. After interviewing many leading scientists, he advanced a provocative thesis:

If one believes in science, one must accept the possibility – even the probability – that the great era of scientific discovery is over. By science I mean not applied science, but science at its purest and greatest, the primordial human quest to understand the universe and our place in it. Further research may yield no more great revelations or revolutions, but only incremental, diminishing returns.19

Horgan is surely right that once something has been discovered – like the structure of DNA – it cannot go on being discovered. But he took it for granted that the tenets of conventional science are true. He assumed that the most fundamental answers are already known. They are not, and every one of them can be replaced by more interesting and fruitful questions, as I show in this book.

Science and Christianity

The founders of mechanistic science in the seventeenth century, including Johannes Kepler, Galileo Galilei, René Descartes, Francis Bacon, Robert Boyle and Isaac Newton, were all practising Christians. Kepler, Galileo and Descartes were Roman Catholics; Bacon, Boyle and Newton Protestants. Boyle, a wealthy aristocrat, was exceptionally devout, and spent large amounts of his own money to promote missionary activity in India. Newton devoted much time and energy to biblical scholarship, with a particular interest in the dating of prophecies. He calculated that the Day of Judgment would occur between the years 2060 and 2344, and set out the details in his book Observations on the Prophecies of Daniel and the Apocalypse of St John.20

Seventeenth-century science created a vision of the universe as a machine intelligently designed and started off by God. Everything was governed by eternal mathematical laws, which were ideas in the mind of God. This mechanistic philosophy was revolutionary precisely because it rejected the animistic view of nature taken for granted in medieval Europe, as discussed in Chapter 1. Until the seventeenth century, university scholars and Christian theologians taught that the universe was alive, pervaded by the Spirit of God, the divine breath of life. All plants, animals and people had souls. The stars, the planets and the earth were living beings, guided by angelic intelligences.

Mechanistic science rejected these doctrines and expelled all souls from nature. The material world became literally inanimate, a soulless machine. Matter was purposeless and unconscious; the planets and stars were dead. In the entire physical universe, the only non-mechanical entities were human minds, which were immaterial, and part of a spiritual realm that included angels and God. No one could explain how minds related to the machinery of human bodies, but René Descartes speculated that they interacted in the pineal gland, the small pine-cone-shaped organ nestled between the right and left hemispheres near the centre of the brain.21

After some initial conflicts, most notably the trial of Galileo by the Roman Inquisition in 1633, science and Christianity were increasingly confined to separate realms by mutual consent. The practice of science was fairly free from religious interference, and religion fairly free from conflict with science, at least until the rise of militant atheism at the end of the eighteenth century. Science’s domain was the material universe, including human bodies, animals, plants, stars and planets. Religion’s realm was spiritual: God, angels, spirits and human souls. This more or less peaceful coexistence served the interests of both science and religion. Even in the late twentieth century Stephen Jay Gould still defended this arrangement as a ‘sound position of general consensus’. He called it the doctrine of Non-overlapping Magisteria. The magisterium of science covers ‘the empirical realm: what the Universe is made of (fact) and why does it work in this way (theory). The magisterium of religion extends over questions of ultimate meaning and moral value.’22

However, from around the time of the French Revolution (1789–99), militant materialists rejected this principle of dual magisteria, dismissing it as intellectually dishonest, or seeing it as a refuge for the feeble-minded. They recognised only one reality: the material world. The spiritual realm did not exist. Gods, angels and spirits were figments of the human imagination, and human minds were nothing but aspects or by-products of brain activity. There were no supernatural agencies that interfered with the mechanical course of nature. There was only one magisterium: the magisterium of science.

Atheist beliefs

The materialist philosophy achieved its dominance within institutional science in the second half of the nineteenth century, and was closely linked to the rise of atheism in Europe. Twenty-first-century atheists, like their predecessors, take the doctrines of materialism to be established scientific facts, not just assumptions.

When it was combined with the idea that the entire universe was like a machine running out of steam, according to the second law of thermodynamics, materialism led to the cheerless worldview expressed by the philosopher Bertrand Russell:

That man is the product of causes which had no prevision of the end they were achieving; that his origin, his growth, his hopes and fears, his loves and beliefs, are but the outcome of accidental collisions of atoms; that no fire, no heroism, no intensity of thought and feeling, can preserve an individual life beyond the grave; that all the labours of the ages, all the devotion, all the inspiration, all the noonday brightness of human genius, are destined to extinction in the vast death of the solar system; and that the whole temple of Man’s achievement must inevitably be buried beneath the debris of a universe in ruins – all these things, if not quite beyond dispute, are yet so nearly certain, that no philosophy which rejects them can hope to stand. Only within the scaffolding of these truths, only on the firm foundation of unyielding despair, can the soul’s habitation henceforth be built.23

How many scientists believe in these ‘truths’? Some accept them without question. But many scientists have philosophies or religious faiths that make this ‘scientific worldview’ seem limited, at best a half-truth. In addition, within science itself, evolutionary cosmology, quantum physics and consciousness studies make the standard dogmas of science look old-fashioned.

It is obvious that science and technology have transformed the world. Science is brilliantly successful when applied to making machines, increasing agricultural yields and developing cures for diseases. Its prestige is immense. Since its beginnings in seventeenth-century Europe, mechanistic science has spread worldwide through European empires and European ideologies, like Marxism, socialism and free-market capitalism. It has touched the lives of billions of people through economic and technological development. The evangelists of science and technology have succeeded beyond the wildest dreams of the missionaries of Christianity. Never before has any system of ideas dominated all humanity. Yet despite these overwhelming successes, science still carries the ideological baggage inherited from its European past.

Science and technology are welcomed almost everywhere because of the obvious material benefits they bring, and the materialist philosophy is part of the package deal. However, religious beliefs and the pursuit of a scientific career can interact in surprising ways. As an Indian scientist wrote in the scientific journal Nature in 2009,

[In India] science is neither the ultimate form of knowledge nor a victim of scepticism … My observations as a research scientist of more than 30 years’ standing suggest that most scientists in India conspicuously evoke the mysterious powers of gods and goddesses to help them achieve success in professional matters such as publishing papers or gaining recognition.24

All over the world, scientists know that the doctrines of materialism are the rules of the game during working hours. Few professional scientists challenge them openly, at least before they retire or get a Nobel Prize. And in deference to the prestige of science, most educated people are prepared to go along with the orthodox creed in public, whatever their private opinions.

However, some scientists and intellectuals are deeply committed atheists, and the materialist philosophy is central to their belief system. A minority become missionaries, filled with evangelical zeal. They see themselves as old-style crusaders fighting for science and reason against the forces of superstition, religion and credulity. Several books putting forward this stark opposition were bestsellers in the 2000s, including Sam Harris’s The End of Faith: Religion, Terror, and the Future of Reason (2004), Daniel Dennett’s Breaking the Spell (2006), Christopher Hitchens’s God Is Not Great: How Religion Poisons Everything (2007) and Richard Dawkins’s The God Delusion (2006), which by 2010 had sold two million copies in English, and was translated into thirty-four other languages.25 Until he retired in 2008, Dawkins was Professor of the Public Understanding of Science at the University of Oxford.

But few atheists believe in materialism alone. Most are also secular humanists, for whom a faith in God has been replaced by a faith in humanity. Humans approach a godlike omniscience through science. God does not affect the course of human history. Instead, humans have taken charge themselves, bringing about progress through reason, science, technology, education and social reform.

Mechanistic science in itself gives no reason to suppose that there is any point in life, or purpose in humanity, or that progress is inevitable. Instead it asserts that the universe is ultimately purposeless, and so is human life. A consistent atheism stripped of the humanist faith paints a bleak picture with little ground for hope, as Bertrand Russell made so clear. But secular humanism arose within a Judaeo-Christian culture and inherited from Christianity a belief in the unique importance of human life, together with a faith in future salvation. Secular humanism is in many ways a Christian heresy, in which man has replaced God.26

Secular humanism makes atheism palatable because it surrounds it with a reassuring faith in progress rather than provable facts. Instead of redemption by God, humans themselves will bring about human salvation through science, reason and social reform.27

Whether or not they share this faith in human progress, all materialists assume that science will eventually prove that their beliefs are true. But this too is a matter of faith.

Dogmas, beliefs and free enquiry

It is not anti-scientific to question established beliefs, but central to science itself. At the creative heart of science is a spirit of open-minded enquiry. Ideally, science is a process, not a position or a belief system. Innovative science happens when scientists feel free to ask new questions and build new theories.

In his influential book The Structure of Scientific Revolutions (1962), the historian of science Thomas Kuhn argued that in periods of ‘normal’ science, most scientists share a model of reality and a way of asking questions that he called a paradigm. The ruling paradigm defines what kinds of questions scientists can ask and how they can be answered. Normal science takes place within this framework and scientists usually explain away anything that does not fit. Anomalous facts accumulate until a crisis point is reached. Revolutionary changes happen when researchers adopt more inclusive frameworks of thought and practice, and are able to incorporate facts that were previously dismissed as anomalies. In due course the new paradigm becomes the basis of a new phase of normal science.28

Kuhn helped focus attention on the social aspect of science and reminded us that science is a collective activity. Scientists are subject to all the usual constraints of human social life, including peer-group pressure and the need to conform to the norms of the group. Kuhn’s arguments were largely based on the history of science, but sociologists of science have taken his insights further by studying science as it is actually practised, looking at the ways that scientists build up networks of support, use resources and results to increase their power and influence, and compete for funding, prestige and recognition.

Bruno Latour’s Science in Action: How to Follow Scientists and Engineers Through Society (1987) is one of the most influential studies in this tradition. Latour observed that scientists routinely make a distinction between knowledge and beliefs. Scientists within their professional group know about the phenomena covered by their field of science, while those outside the network have only distorted beliefs. When scientists think about people outside their groups, they often wonder how they can still be so irrational:

[T]he picture of non-scientists drawn by scientists becomes bleak: a few minds discover what reality is, while the vast majority of people have irrational ideas or at least are prisoners of many social, cultural and psychological factors that make them stick obstinately to obsolete prejudices. The only redeeming aspect of this picture is that if it were only possible to eliminate all these factors that hold people prisoners of their prejudices, they would all, immediately and at no cost, become as sound-minded as the scientists, grasping the phenomena without further ado. In every one of us there is a scientist who is asleep, and who will not wake up until social and cultural conditions are pushed aside.29

For believers in the ‘scientific worldview’, all that is needed is to increase the public understanding of science through education and the media.

Since the nineteenth century, a belief in materialism has indeed been propagated with remarkable success: millions of people have been converted to this ‘scientific’ view, even though they know very little about science itself. They are, as it were, devotees of the Church of Science, or of scientism, of which scientists are the priests. This is how a prominent atheist layman, Ricky Gervais, expressed these attitudes in the Wall Street Journal in 2010, the same year that he was on the Time magazine list of the 100 most influential people in the world. Gervais is an entertainer, not a scientist or an original thinker, but he borrows the authority of science to support his atheism:

Science seeks the truth. And it does not discriminate. For better or worse it finds things out. Science is humble. It knows what it knows and it knows what it doesn’t know. It bases its conclusions and beliefs on hard evidence – evidence that is constantly updated and upgraded. It doesn’t get offended when new facts come along. It embraces the body of knowledge. It doesn’t hold onto medieval practices because they are tradition.30

Gervais’s idealised view of science is hopelessly naïve in the context of the history and sociology of science. It portrays scientists as open-minded seekers of truth, not ordinary people competing for funds and prestige, constrained by peer-group pressures and hemmed in by prejudices and taboos. Yet naïve as it is, I take this ideal of free enquiry seriously. This book is an experiment in which I apply these ideals to science itself. By turning assumptions into questions I want to find out what science really knows and doesn’t know. I look at the ten core doctrines of materialism in the light of hard evidence and recent discoveries. I assume that true scientists will not be offended when new facts come along, and that they will not hold onto the materialist worldview just because it’s traditional.

I am doing this because the spirit of enquiry has continually liberated scientific thinking from unnecessary limitations, whether imposed from within or without. I am convinced that the sciences, for all their successes, are being stifled by outmoded beliefs.

Photo Hanna-Katrina Jedrosz

Rupert Sheldrake is a biologist and author of more than 90 scientific papers and 9 books, and the co-author of 6 books. His books have been published in 28 languages. He was among the top 100 Global Thought Leaders for 2013, as ranked by the Duttweiler Institute, Zurich, Switzerland's leading think tank. On ResearchGate, the largest scientific and academic online network, his RG score of 33.5 puts him among the top 7.5% of researchers, based on citations of his peer-reviewed publications. On Google Scholar, the many citations of his work give him a high h-index of 38, and an i10 index of 102.

He studied natural sciences at Cambridge University, where he was a Scholar of Clare College, took a double first class honours degree and was awarded the University Botany Prize (1962). He then studied philosophy and history of science at Harvard University, where he was a Frank Knox Fellow (1963-64), before returning to Cambridge, where he took a Ph.D. in biochemistry (1967). He was a Fellow of Clare College, Cambridge (1967-73), where he was Director of Studies in biochemistry and cell biology. As the Rosenheim Research Fellow of the Royal Society (1970-73), he carried out research on the development of plants and the ageing of cells in the Department of Biochemistry at Cambridge University. While at Cambridge, together with Philip Rubery, he discovered the mechanism of polar auxin transport, the process by which the plant hormone auxin is carried from the shoots towards the roots.

Malaysia and India

From 1968 to 1969, as a Royal Society Leverhulme Scholar, based in the Botany Department of the University of Malaya, Kuala Lumpur, he studied rain forest plants. From 1974 to 1985 he was Principal Plant Physiologist and Consultant Physiologist at the International Crops Research Institute for the Semi-Arid Tropics (ICRISAT) in Hyderabad, India, where he helped develop new cropping systems now widely used by farmers. While in India, he also lived for a year and a half at the ashram of Fr Bede Griffiths in Tamil Nadu, where he wrote his first book, A New Science of Life, published in 1981 (new edition 2009).

Cambridge, 1970

Experimental research

Since 1981, he has continued research on developmental and cell biology. He has also investigated unexplained aspects of animal behaviour, including how pigeons find their way home, the telepathic abilities of dogs, cats and other animals, and the apparent abilities of animals to anticipate earthquakes and tsunamis. He subsequently studied similar phenomena in people, including the sense of being stared at, telepathy between mothers and babies, telepathy in connection with telephone calls, and premonitions. Although some of these areas overlap the field of parapsychology, he approaches them as a biologist, and bases his research on natural history and experiments under natural conditions, as opposed to laboratory studies. His research on these subjects is summarized in his books Seven Experiments That Could Change the World (1994, second edition 2002), Dogs That Know When Their Owners Are Coming Home (1999, new edition 2011) and The Sense of Being Stared At (2003, new edition 2012).

Recent books

The Science Delusion, published in the US as Science Set Free, examines the ten dogmas of modern science and shows how they can be turned into questions that open up new vistas of scientific possibility. This book received the Book of the Year Award from the British Scientific and Medical Network. His two most recent books, Science and Spiritual Practices and Ways to Go Beyond And Why They Work, are about rediscovering ways of connecting with the more-than-human world through direct experience.

Academic appointments

Since 1985, he has taught every year on courses at Schumacher College, in Devon, England, and has been part of the faculty for the MSc in Holistic Science programme since its inception in 1998. In 2000, he was the Steinbach Scholar in Residence at the Woods Hole Oceanographic Institute in Cape Cod, Massachusetts. From 2003 to 2011, he was Visiting Professor and Academic Director of the Holistic Thinking Program at the Graduate Institute, Connecticut, USA. In 2005, he was Visiting Professor of Evolutionary Science at the Wisdom University, Oakland, California. From 2005 to 2010, he was the Director of the Perrott-Warrick Project, funded from Trinity College, Cambridge University. He is currently a Fellow of the Institute of Noetic Sciences in California, a Fellow of Schumacher College in Devon, England, and a Fellow of the Temenos Academy, London.

Awards

In 1960, he was awarded a Major Open Scholarship in Natural Sciences at Clare College, Cambridge. In 1962 he received the Cambridge University Frank Smart Prize for Botany, and in 1963 the Clare College Greene Cup for General Learning. In 1994, he won the Book of the Year Award from The Institute for Social Inventions for his book Seven Experiments That Could Change the World. In 1999 he won the Book of the Year Award from the Scientific and Medical Network for his book Dogs That Know When Their Owners Are Coming Home, and he won the same award again in 2012 for his book The Science Delusion (Science Set Free in the US).

He received the 2014 Bridgebuilder Award at Loyola Marymount University, Los Angeles, a prize established by the Doshi family "to honor an individual or organization dedicated to fostering understanding between cultures, peoples and disciplines." In 2015, in Venice, Italy, he was awarded the first Lucia Torri Cianci prize for innovative thinking.

Family

He lives in London with his wife Jill Purce. They have two sons, Merlin, who received his PhD at Cambridge University in 2016 for his work in tropical ecology at the Smithsonian Tropical Research Institute in Panama, and Cosmo, a musician.

Books by Rupert Sheldrake

Ways to Go Beyond And Why They Work: Seven Spiritual Practices in a Scientific Age (Coronet, London, 2019)

Science and Spiritual Practices: Reconnecting Through Direct Experience (Coronet, London, 2017; Counterpoint, Berkeley, 2018)

The Science Delusion: Freeing the Spirit of Enquiry (Hodder and Stoughton, London, 2012; published in the US as Science Set Free: 10 Paths to New Discovery, Random House, 2012)

The Sense of Being Stared At, And Other Aspects of the Extended Mind (Hutchinson, London, 2003; Crown, New York; new edition, 2013, Inner Traditions International)

Dogs that Know When Their Owners are Coming Home, and Other Unexplained Powers of Animals (Hutchinson, London, 1999; Crown, New York; new edition, 2011, Random House, New York)

Seven Experiments that Could Change the World: A Do-It-Yourself Guide to Revolutionary Science (Fourth Estate, London, 1994; Riverside/Putnams, New York, 1995; new edition, 2002, Inner Traditions International)

The Rebirth of Nature: The Greening of Science and God (Century, London, 1992; Bantam, New York)

The Presence of the Past: Morphic Resonance and the Habits of Nature (Collins, London, 1988; Times Books, New York; new edition, 2012, Icon Books)

A New Science of Life: The Hypothesis of Formative Causation (Blond and Briggs, London, 1981; Tarcher, Los Angeles, 1982; new edition, 2009, Icon Books)

With Michael Shermer

Arguing Science (Monkfish Book Publishing, 2016)

With Kate Banks

Boy’s Best Friend (Farrar, Straus and Giroux, New York, 2015)

With Ralph Abraham and Terence McKenna

The Evolutionary Mind (Monkfish Publishing, 2005)

Chaos, Creativity and Cosmic Consciousness (Inner Traditions International, 2001)

With Matthew Fox

Natural Grace: Dialogues on Science and Spirituality (Bloomsbury, London, 1996; Doubleday, New York)

The Physics of Angels (Harper San Francisco, 1996)

Anthologies

Essay in How I Found God in Everyone and Everywhere: An Anthology of Spiritual Memoirs (Monkfish, 2018)

Talks and Workshops

Rupert Sheldrake has spoken at many scientific and medical conferences, and has given lectures and seminars in a wide range of universities, including the following (from 2008 to 2019):

Aarhus University, Denmark

Amsterdam University, Holland

Bath Spa University

Bath University

Birkbeck College, London

California Institute of Integral Studies, San Francisco

Cambridge University

Christchurch University, Canterbury

City University, London

Ecole des Beaux-Arts, Paris

Europa University, Frankfurt (Oder), Germany

Goldsmiths College, London

Greenwich University, London

Groningen University, Holland

Haverford College, Pennsylvania

Imperial College, London

Innsbruck University, Austria

Kings College, London

Leiden University, Holland

Manchester Metropolitan University

Middlesex University

New York University

Niels Bohr Institute at Copenhagen University

Northampton University

Oxford University

Royal College of Art, London

Sainsbury Laboratory, Cambridge University

Schumacher College/University of Plymouth

Sussex University

Trinity College, Dublin

University College, Dublin

University College, London

Vienna University

Vienna: Sigmund Freud University

Vienna: Universität für Bodenkultur

Wageningen University, Holland

Winchester University

www.hodder.co.uk

First published in Great Britain in 2012 by Coronet

An imprint of Hodder & Stoughton

An Hachette UK company

Copyright © Rupert Sheldrake 2012

The right of Rupert Sheldrake to be identified as the Author of the Work has been asserted by him in accordance with the Copyright, Designs and Patents Act 1988.

All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means without the prior written permission of the publisher, nor be otherwise circulated in any form of binding or cover other than that in which it is published and without a similar condition being imposed on the subsequent purchaser.

A CIP catalogue record for this title is available from the British Library

ISBN 978 1 444 72795 1

Hodder & Stoughton Ltd

338 Euston Road

London NW1 3BH

Many people who have not studied science are baffled by scientists’ insistence that animals and plants are machines, and that humans are robots too, controlled by computer-like brains with genetically programmed software. It seems more natural to assume that we are living organisms, and so are animals and plants. Organisms are self-organising; they form and maintain themselves, and have their own ends or goals. Machines, by contrast, are designed by an external mind; their parts are put together by external machine-makers and they have no purposes or ends of their own.

The starting point for modern science was the rejection of the older, organic view of the universe. The machine metaphor became central to scientific thinking, with very far-reaching consequences. In one way it was immensely liberating. New ways of thinking became possible that encouraged the invention of machines and the evolution of technology. In this chapter, I trace the history of this idea, and show what happens when we question it.

Before the seventeenth century, almost everyone took for granted that the universe was like an organism, and so was the earth. In classical, medieval and Renaissance Europe, nature was alive. Leonardo da Vinci (1452–1519), for example, made this idea explicit: ‘We can say that the earth has a vegetative soul, and that its flesh is the land, its bones are the structure of the rocks … its breathing and its pulse are the ebb and flow of the sea.’1 William Gilbert (1540–1603), a pioneer of the science of magnetism, was explicit in his organic philosophy of nature: ‘We consider that the whole universe is animated, and that all the globes, all the stars, and also the noble earth have been governed since the beginning by their own appointed souls and have the motives of self-conservation.’2

Even Nicholas Copernicus, whose revolutionary theory of the movement of the heavens, published in 1543, placed the sun at the centre rather than the earth, was no mechanist. His reasons for making this change were mystical as well as scientific. He thought a central position dignified the sun:

Not unfittingly do some call it the light of the world, others the soul, still others the governor. Trismegistus calls it the visible God: Sophocles’ Electra, the All-seer. And in fact does the sun, seated on his royal throne, guide his family of planets as they circle around him.3

Copernicus’s revolution in cosmology was a powerful stimulus for the subsequent development of physics. But the shift to the mechanical theory of nature that began after 1600 was much more radical.

For centuries, there had already been mechanical models of some aspects of nature. For example, in Wells Cathedral, in the west of England, there is a still-functioning astronomical clock installed more than six hundred years ago. The clock’s face shows the sun and moon revolving around the earth, against a background of stars. The movement of the sun indicates the time of day, and the inner circle of the clock depicts the moon, rotating once a month. To the delight of visitors, every quarter of an hour, models of jousting knights rush round chasing each other, while a model of a man bangs bells with his heels.

Astronomical clocks were first made in China and in the Arab world, and powered by water. Their construction began in Europe around 1300, but with a new kind of mechanism, operated by weights and escapements. All these early clocks took for granted that the earth was at the centre of the universe. They were useful models for telling the time and for predicting the phases of the moon; but no one thought that the universe was really like a clockwork mechanism.

A change from the metaphor of the organism to the metaphor of the machine produced science as we know it: mechanical models of the universe were taken to represent the way the world actually worked. The movements of stars and planets were governed by impersonal mechanical principles, not by souls or spirits with their own lives and purposes.

In 1605, Johannes Kepler summarised his programme as follows: ‘My aim is to show that the celestial machine is to be likened not to a divine organism but rather to clockwork … Moreover I show how this physical conception is to be presented through calculation and geometry.’4 Galileo Galilei (1564–1642) agreed that ‘inexorable, immutable’ mathematical laws ruled everything.

The clock analogy was particularly persuasive because clocks work in a self-contained way. They are not pushing or pulling other objects. Likewise the universe performs its work by the regularity of its motions, and is the ultimate time-telling system. Mechanical clocks had a further metaphorical advantage: they were a good example of knowledge through construction, or knowing by doing. Someone who could construct a machine could reconstruct it. Mechanical knowledge was power.

The prestige of mechanistic science did not come primarily from its philosophical underpinnings but from its practical successes, especially in physics. Mathematical modelling typically involves extreme abstraction and simplification, which is easiest to realise with man-made machines or objects. Mathematical mechanics is impressively useful in dealing with relatively simple problems, such as the trajectories of cannon balls or rockets.

One paradigmatic example is billiard-ball physics, which gives a clear account of impacts and collisions of idealised billiard balls in a frictionless environment. Not only is the mathematics simplified, but billiard balls themselves are a very simplified system. The balls are made as round as possible and the table as flat as possible, and there are uniform rubber cushions at the sides of the table, unlike any natural environment. Think of a rock falling down a mountainside for comparison. Moreover, in the real world, billiard balls collide and bounce off each other in games, but the rules of the game and the skills and motives of the players are outside the scope of physics. The mathematical analysis of the balls’ behaviour is an extreme abstraction.

From living organisms to biological machines

The vision of mechanical nature developed amid devastating religious wars in seventeenth-century Europe. Mathematical physics was attractive partly because it seemed to provide a way of transcending sectarian conflicts to reveal eternal truths. In their own eyes the pioneers of mechanistic science were finding a new way of understanding the relationship of nature to God, with humans adopting a God-like mathematical omniscience, rising above the limitations of human minds and bodies. As Galileo put it:

When God produces the world, he produces a thoroughly mathematical structure that obeys the laws of number, geometrical figure and quantitative function. Nature is an embodied mathematical system.5

But there was a major problem. Most of our experience is not mathematical. We taste food, feel angry, enjoy the beauty of flowers, laugh at jokes. In order to assert the primacy of mathematics, Galileo and his successors had to distinguish between what they called ‘primary qualities’, which could be described mathematically, such as motion, size and weight, and ‘secondary qualities’, like colour and smell, which were subjective.6 They took the real world to be objective, quantitative and mathematical. Personal experience in the lived world was subjective, the realm of opinion and illusion, outside the realm of science.

René Descartes (1596–1650) was the principal proponent of the mechanical or mechanistic philosophy of nature. It first came to him in a vision on 10 November 1619 when he was ‘filled with enthusiasm and discovered the foundations of a marvellous science’.7 He saw the entire universe as a mathematical system, and later envisaged vast vortices of swirling subtle matter, the aether, carrying around the planets in their orbits.

Descartes took the mechanical metaphor much further than Kepler or Galileo by extending it into the realm of life. He was fascinated by the sophisticated machinery of his age, such as clocks, looms and pumps. As a youth he designed mechanical models to simulate animal activity, such as a pheasant pursued by a spaniel. Just as Kepler projected the image of man-made machinery onto the cosmos, Descartes projected it onto animals. They, too, were like clockwork.8 Activities like the beating of a dog’s heart, its digestion and breathing were programmed mechanisms. The same principles applied to human bodies.

Descartes cut up living dogs in order to study their hearts, and reported his observations as if his readers might want to replicate them: ‘If you slice off the pointed end of the heart of a live dog, and insert a finger into one of the cavities, you will feel unmistakably that every time the heart gets shorter it presses the finger, and every time it gets longer it stops pressing it.’9

He backed up his arguments with a thought experiment: first he imagined man-made automata that imitated the movements of animals, and then argued that if they were made well enough they would be indistinguishable from real animals:

If any such machines had the organs and outward shapes of a monkey or of some other animal that lacks reason, we should have no way of knowing that they did not possess entirely the same nature as those animals.10

With arguments like these, Descartes laid the foundations of mechanistic biology and medicine that are still orthodox today. However, the machine theory of life was less readily accepted in the seventeenth and eighteenth centuries than the machine theory of the universe. Especially in England, the idea of animal-machines was considered eccentric.11 Descartes’ doctrine seemed to justify cruelty to animals, including vivisection, and it was said that the test of his followers was whether they would kick their dogs.12

As the philosopher Daniel Dennett summarised it, ‘Descartes … held that animals were in fact just elaborate machines … It was only our non-mechanical, non-physical minds that make human beings (and only human beings) intelligent and conscious. This was actually a subtle view, most of which would readily be defended by zoologists today, but it was too revolutionary for Descartes’ contemporaries.’13

We are so used to the machine theory of life that it is hard to appreciate what a radical break Descartes made. The prevailing theories of his time took for granted that living organisms were organisms, animate beings with their own souls. Souls gave organisms their purposes and powers of self-organisation. From the Middle Ages right up into the seventeenth century, the prevailing theory of life taught in the universities of Europe followed the Greek philosopher Aristotle and his leading Christian interpreter, Thomas Aquinas (c. 1225–74), according to whom the matter in plant or animal bodies was shaped by the organisms’ souls. For Aquinas, the soul was the form of the body.14 The soul acted like an invisible mould that shaped the plant or the animal as it grew and attracted it towards its mature form.15

The souls of animals and plants were natural, not supernatural. According to classical Greek and medieval philosophy, and also in William Gilbert’s theory of magnetism, even magnets had souls.16 The soul within and around them gave them their powers of attraction and repulsion. When a magnet was heated and lost its magnetic properties, it was as if the soul had left it, just as the soul left an animal body when it died. We now talk in terms of magnetic fields. In most respects fields have replaced the souls of classical and medieval philosophy.17

Before the mechanistic revolution, there were three levels of explanation: bodies, souls and spirits. Bodies and souls were part of nature. Spirits were non-material but interacted with embodied beings through their souls. The human spirit, or ‘rational soul’, according to Christian theology, was potentially open to the Spirit of God.18

After the mechanistic revolution, there were only two levels of explanation: bodies and spirits. Three layers were reduced to two by removing souls from nature, leaving only the human ‘rational soul’ or spirit. The abolition of souls also separated humanity from all other animals, which became inanimate machines. The ‘rational soul’ of man was like an immaterial ghost in the machinery of the human body.

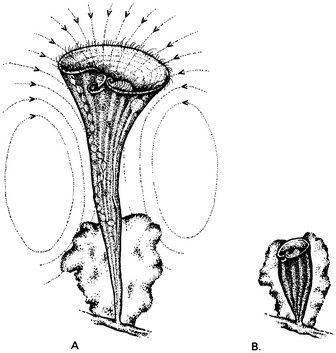

How could the rational soul possibly interact with the brain? Descartes speculated that their interaction occurred in the pineal gland.19 He thought of the soul as like a little man inside the pineal gland controlling the plumbing of the brain. He compared the nerves to water pipes, the cavities in the brain to storage tanks, the muscles to mechanical springs, and breathing to the movements of a clock. The organs of the body were like the automata in seventeenth-century water gardens, and the immaterial man within was like the fountain keeper:

External objects, which by their mere presence stimulate [the body’s] sense organs … are like visitors who enter the grottoes of these fountains and unwittingly cause the movements which take place before their eyes. For they cannot enter without stepping on certain tiles which are so arranged that if, for example, they approach a Diana who is bathing they will cause her to hide in the reeds. And finally, when a rational soul is present in this machine it will have its principal seat in the brain, and reside there like the fountain keeper who must be stationed at the tanks to which the fountain’s pipes return if he wants to produce, or prevent, or change their movements in some way.20

The final step in the mechanistic revolution was to reduce two levels of explanation to one. Instead of a duality of matter and mind, there is only matter. This is the doctrine of materialism, which came to dominate scientific thinking in the second half of the nineteenth century. Nevertheless, despite their nominal materialism, most scientists remained dualists, and continued to use dualistic metaphors.