The Body Electric

The Body Electric tells the fascinating story of our bioelectric selves.

Robert O. Becker, a pioneer in the field of regeneration and its relationship to electrical currents in living things, challenges the established mechanistic understanding of the body.

He found clues to the healing process in the long-discarded theory that electricity is vital to life.

But as exciting as Becker's discoveries are, pointing to the day when human limbs, spinal cords, and organs may be regenerated after they have been damaged, equally fascinating is the story of Becker's struggle to do such original work.

The Body Electric explores new pathways in our understanding of evolution, acupuncture, psychic phenomena, and healing.

To my wife, Lillian —R.O.B.

To Harwood Rhodes, from a grateful student — G.S.

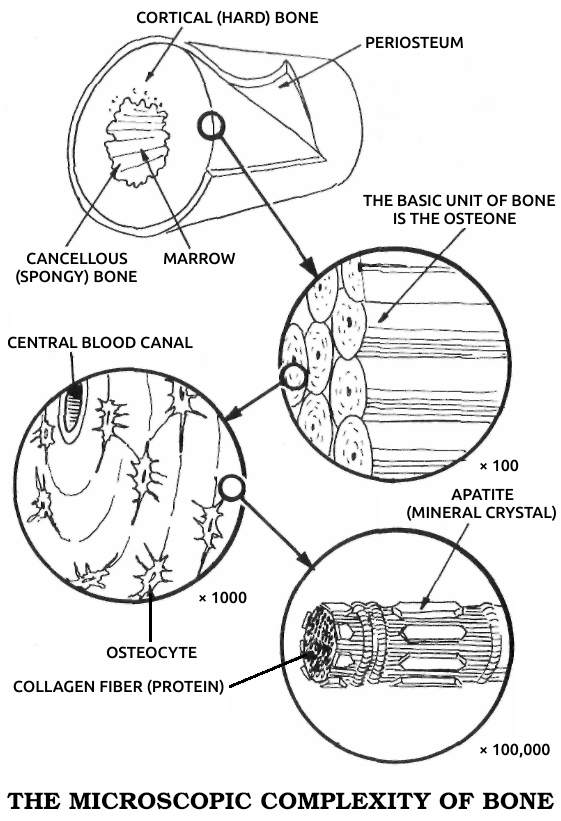

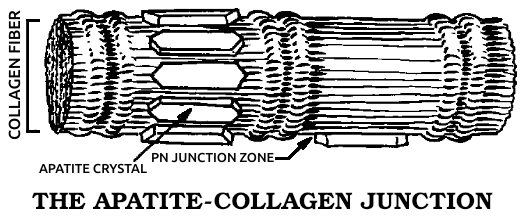

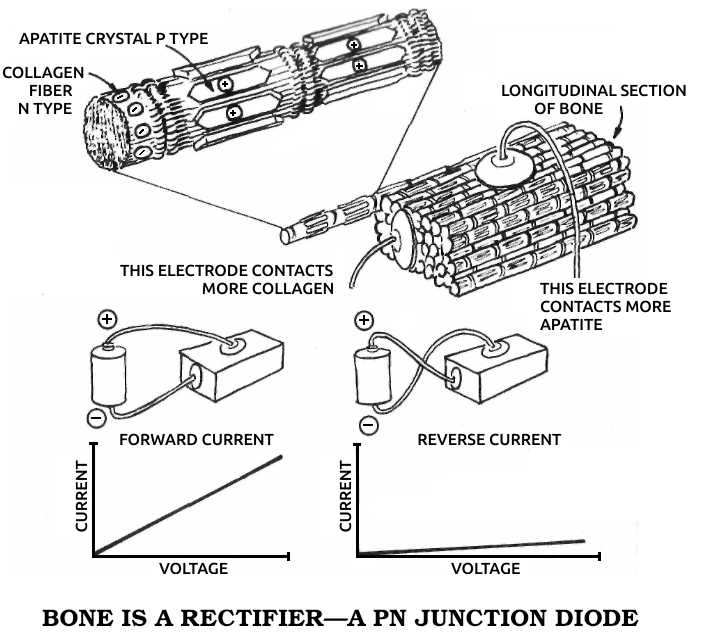

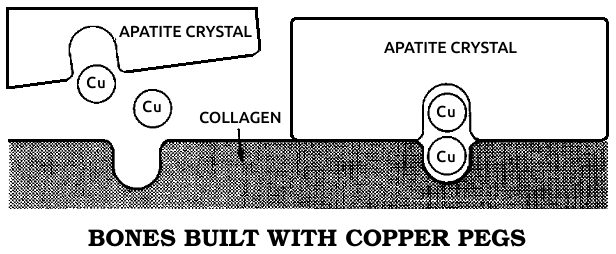

Apatite: The mineral fraction of bone, microscopic calcium phosphate crystals deposited on the preexisting collagen structure of the bone, making it hard. See also Cᴏʟʟᴀɢᴇɴ.

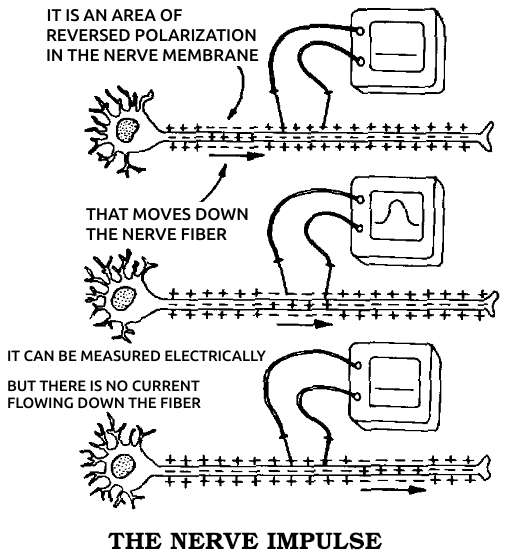

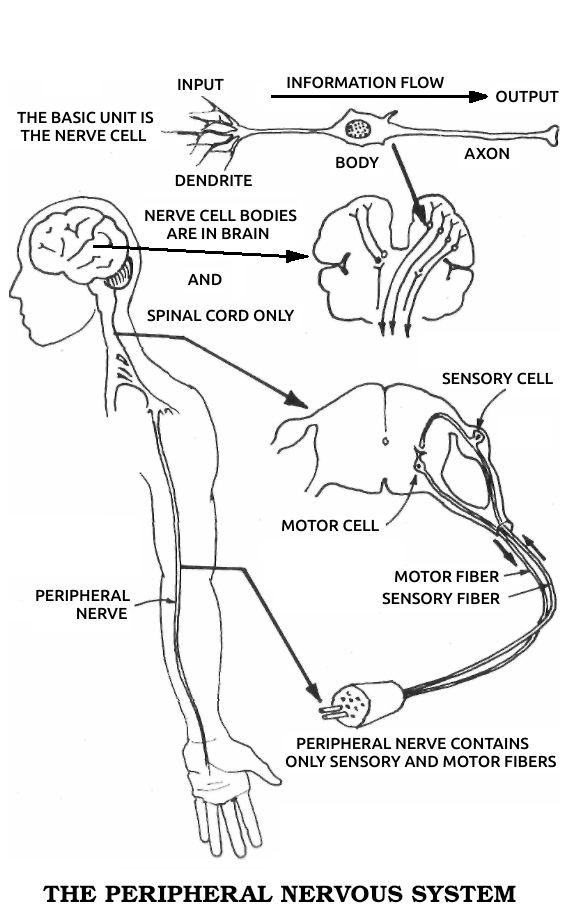

Axon: The prolongation of a nerve cell that carries a message, or stimulus, away from the cell body. For example, a motor nerve cell axon carries a contraction stimulus to a muscle. See also Dᴇɴᴅʀɪᴛᴇ, Nᴇᴜʀᴏɴ.

Base pair: An association between two of the four fundamental chemical groups that make up all DNA and RNA molecules. Base pairs are the smallest structures that form units of meaning in the genetic code. The more base pairs, the larger the molecule.

Biological cycles: Changes in the activities of living things in an ebb-and-flow pattern. Such changes occur in almost all physical aspects, including sleep-wakefulness, hormone levels, and numbers of white cells in the blood. The predominant pattern is approximately twenty-four hours and usually follows the lunar day closely. See also Cɪʀᴄᴀᴅɪᴀɴ ʀʜʏᴛʜᴍ.

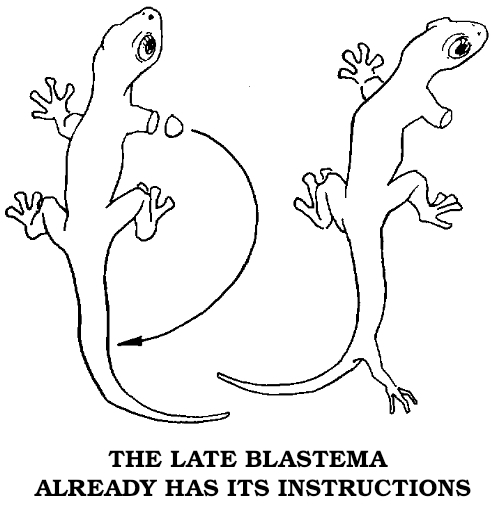

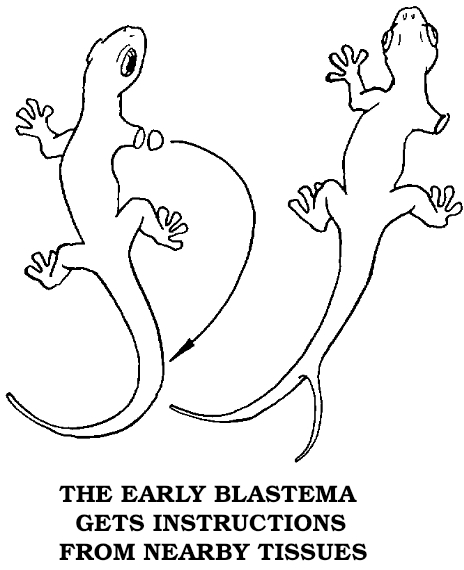

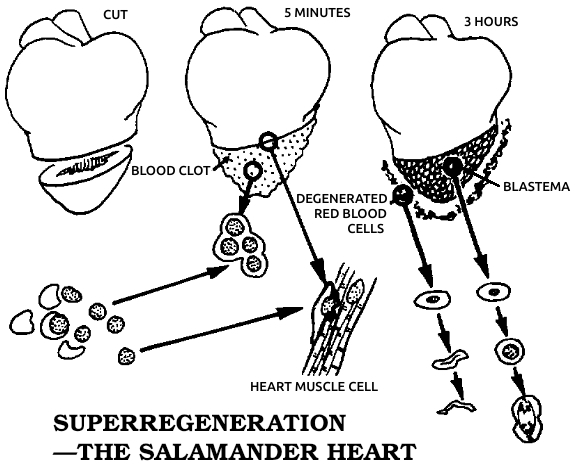

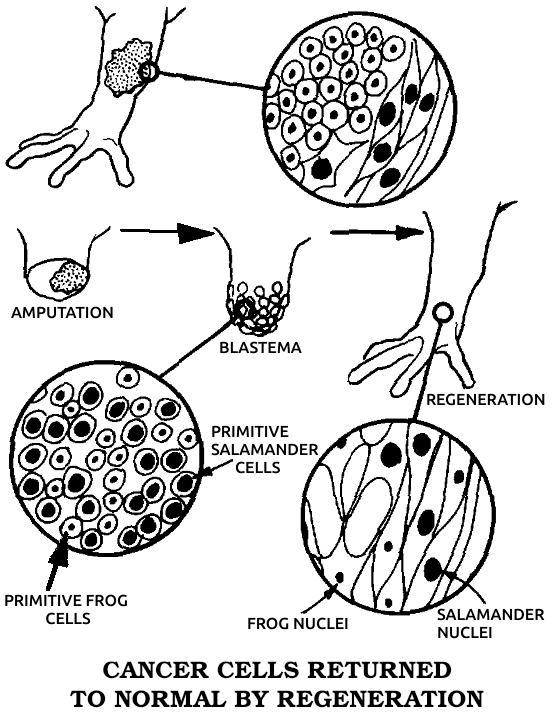

Blastema: The mass of primitive, unspecialized cells that appears at the site of an injury in animals that regenerate. Blastema cells specialize and form the replacement part.

Circadian rhythm: The predominant biological cycle of all living things, from Latin circa ("approximately") and dies ("a day"). See also Bɪᴏʟᴏɢɪᴄᴀʟ ᴄʏᴄʟᴇs.

Collagen: A protein that makes up most of the fibrous connective tissue that holds the body's parts together. Tendons, ligaments, and scar tissue are composed almost entirely of collagen. It also forms the basic structure of bone. See also Aᴘᴀᴛɪᴛᴇ.

Crystal lattice: The precise, orderly arrangement of atoms in a crystal, forming a netlike structure.

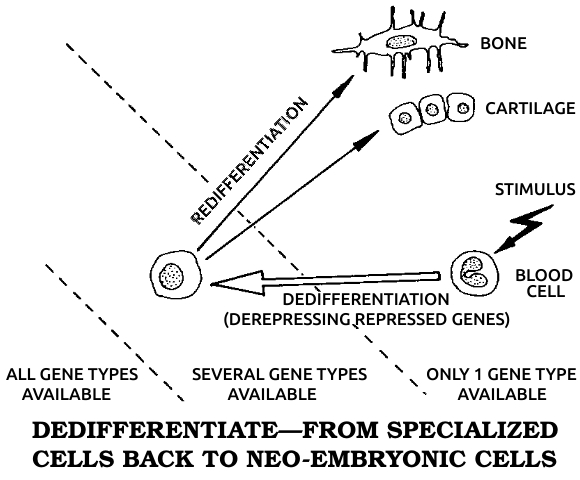

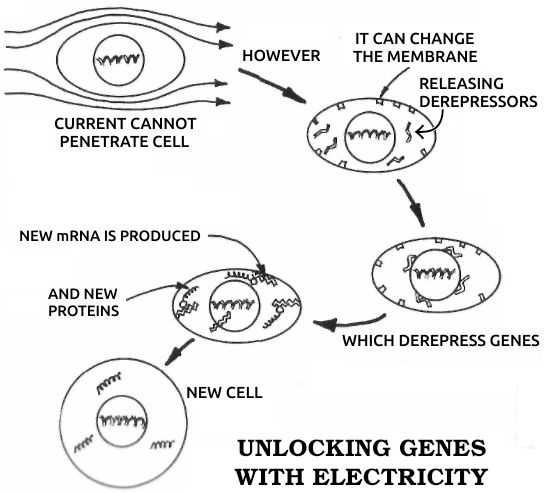

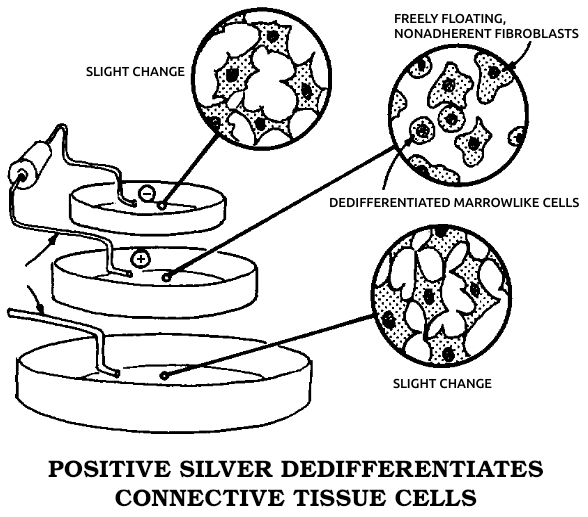

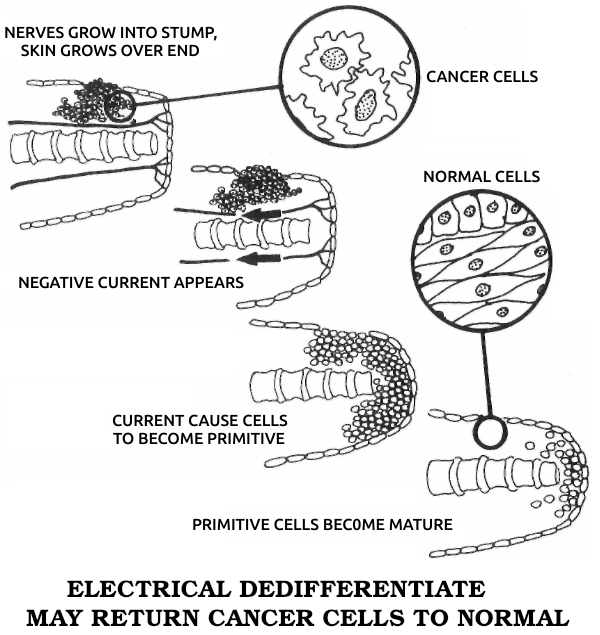

Dedifferentiation: The process in which a mature, specialized cell returns to its original, embryonic, unspecialized state. During dedifferentiation the genes that code for all other cell types are made available for use by derepressing them. See also Dɪғғᴇʀᴇɴᴛɪᴀᴛɪᴏɴ, Gᴇɴᴇ, Rᴇᴅɪғғᴇʀᴇɴᴛɪᴀᴛɪᴏɴ.

Dendrite: The prolongation of a nerve cell that carries a message, or stimulus, toward the cell body. For example, sensory nerve cell bodies receive stimuli from receptors in the skin via their dendrites. See also Axᴏɴ, Nᴇᴜʀᴏɴ.

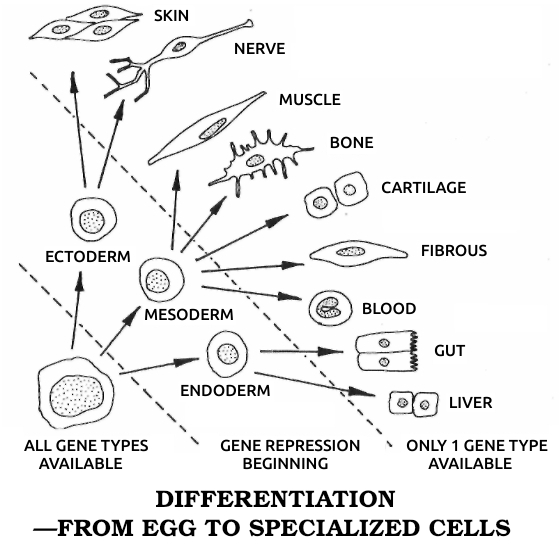

Differentiation: The process in which a cell matures from a simple embryonic type to a mature, specialized type in the adult. Differentiation involves restricting, or repressing, all genes for other cell types. See also Dᴇᴅɪғғᴇʀᴇɴᴛɪᴀᴛɪᴏɴ, Gᴇɴᴇ, Rᴇᴅɪғғᴇʀᴇɴᴛɪᴀᴛɪᴏɴ.

DNA: The molecule in cells that contains genetic information.

Ectoderm: One of three primary tissues in the embryo, formed as differentiation (cell specialization) is just beginning. The ectoderm gives rise to the skin and nervous system. See also Eɴᴅᴏᴅᴇʀᴍ, Mᴇsᴏᴅᴇʀᴍ.

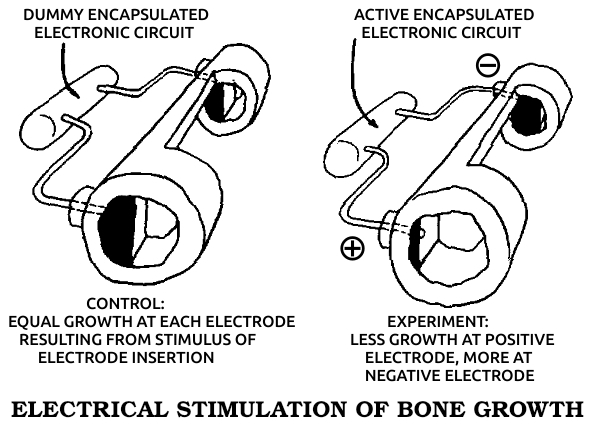

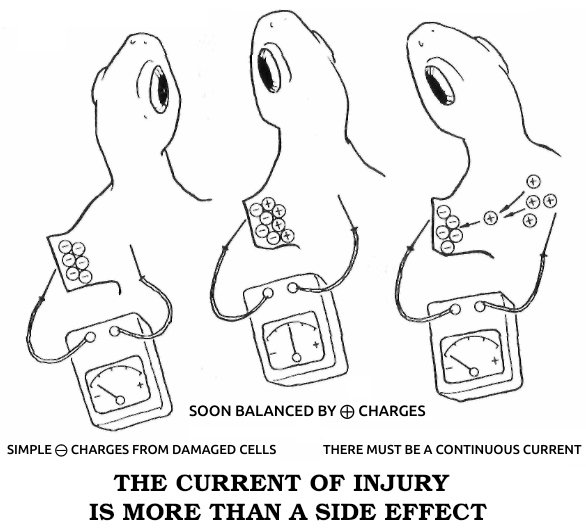

Electrode: A device, usually metal, that connects electronic equipment to a living organism for the purpose of measuring electrical currents or voltages in the organism, or delivering a measured electrical stimulus to the organism.

Electrolyte: Any chemical compound that, when dissolved in water, separates into charged atoms that permit the passage of electrical current through the solution.

Embryogenesis: The growth of a new individual from a fertilized egg to the moment of hatching or birth.

Endoderm: One of three primary tissues in the embryo, formed as differentiation (cell specialization) is beginning. It forms the digestive organs. See also Eᴄᴛᴏᴅᴇʀᴍ, Mᴇsᴏᴅᴇʀᴍ.

Epidermis: The outer layer of skin, having no blood vessels.

Epigenesis: The development of a complex organism from a simple, undifferentiated unit, such as the egg cell. It is the opposite of preformation, in which a complex organism was thought to develop from a smaller, but similarly complex, antecedent, such as the homunculus that some early biologists thought resided in the sperm or egg cell.

Epithelium: A general term for skin and for the lining of the digestive tract.

Exudate: Liquid, sometimes containing cells, that diffuses out from a wound or surface structure of a living organism. Examples are a wound exudate and the slime exudate from the skin of fish.

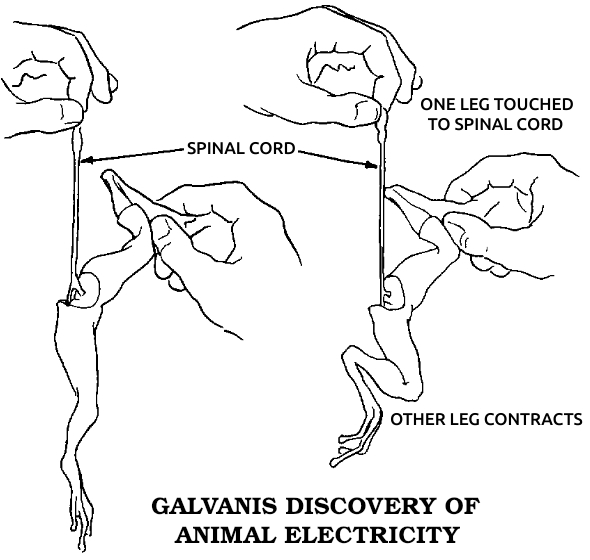

Galvanotaxis: The movement of a living organism toward or away from a source of electric current.

Gene: A portion of a DNA molecule structured so as to produce a specific effect in the cell.

Gene expression: Specific structure and activity of a cell in response to a group of genes coded for such activity. For example, genes coded for muscle cause a primitive cell to assume the structure and function of a muscle cell.

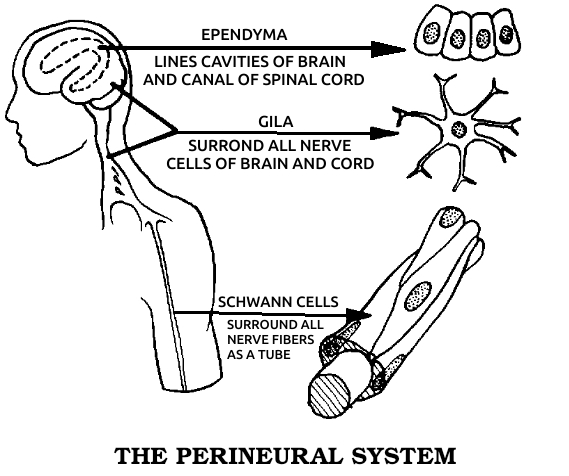

Glia: A tissue composed of a variety of cells, mostly glial cells, that makes up most of the nervous system. These cells have been considered nonneural in the sense that they cannot produce nerve impulses. Therefore they have been thought unable to transmit information, rather having protective and nutritive roles for the nerve cells proper. This concept is changing. It is now known that glial cells have electrical properties that, while not the same as nerve impulse transmission, enable them to play a role in communication in the body.

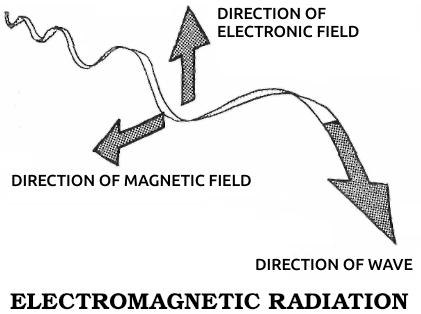

Hertz: Cycles per second, the unit for measuring the vibratory rate of electromagnetic radiation. Named for Heinrich Hertz, German physicist who made the first experimental discovery of radio waves in 1888.

Homeostasis: The ability of living organisms to maintain a constant "internal environment." For example, the human body maintains a constant amount of dissolved oxygen in the blood at all times by means of various mechanisms that sense the oxygen level and increase or decrease the breathing rate.

In vitro: An experiment done in a glass dish on part of a living organism.

In vivo: An experiment done on an intact, whole organism.

Magnetosphere: The area around the earth in which the planet's magnetic field exerts a stronger influence than the solar or interplanetary magnetic field. It extends some 30,000 to 50,000 miles from the earth's surface. A prominent feature of the magnetosphere is the Van Allen belts, areas of charged particles trapped by the earth's magnetic field.

Magnetotactic: Active movement toward a magnetic pole.

Mesoderm: One of three primary tissue layers in the embryo, which develop as differentiation (cell specialization) begins. It becomes the muscular and circulatory system in the adult.

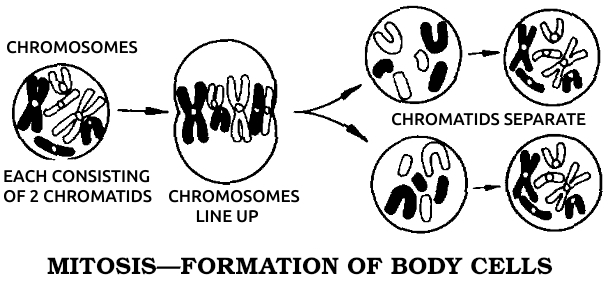

Mitosis: Cell division. Actual division takes only a few minutes but must be preceded by a much longer period during which preparatory events, such as duplication of DNA, take place. The entire process generally takes about one day.

Neoblast: An unspecialized embryonic cell retained in the adult bodies of certain primitive animals and called to the site of an injury to take part in regenerative healing.

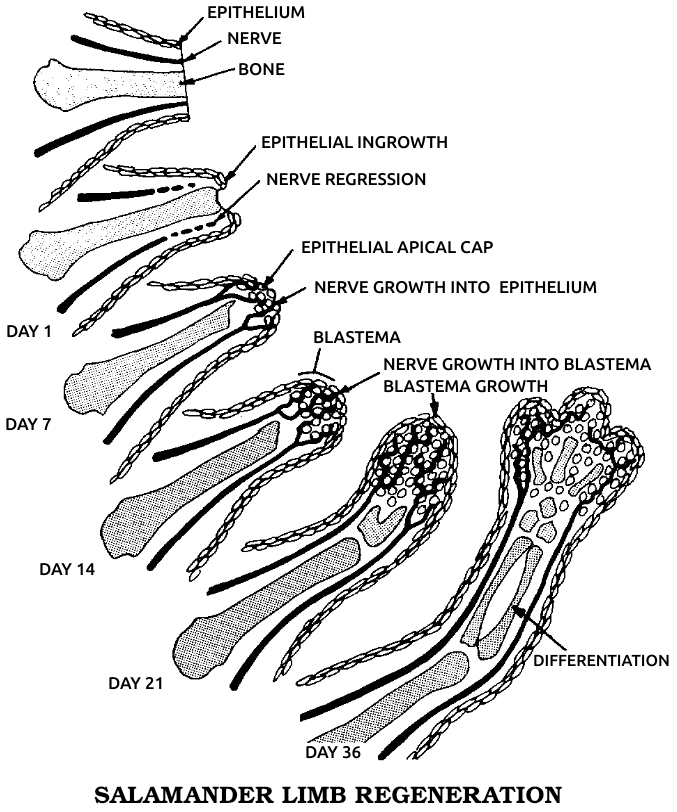

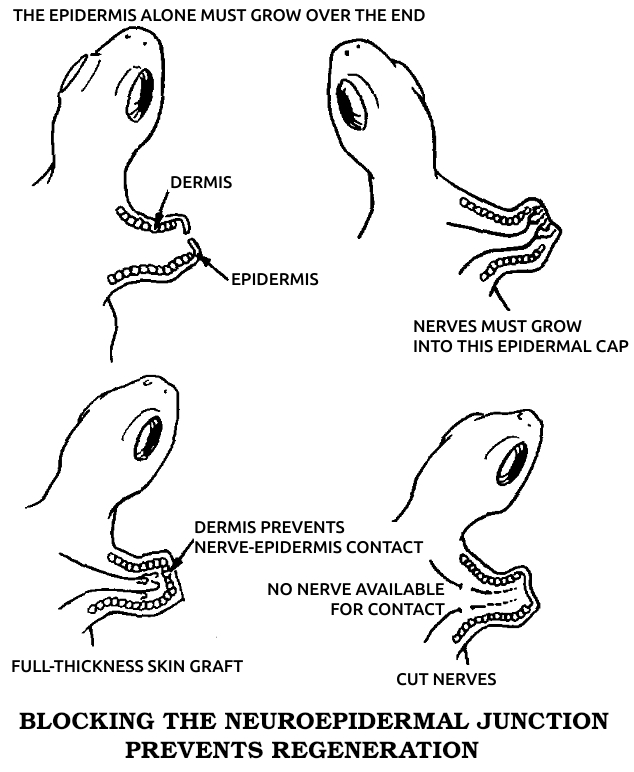

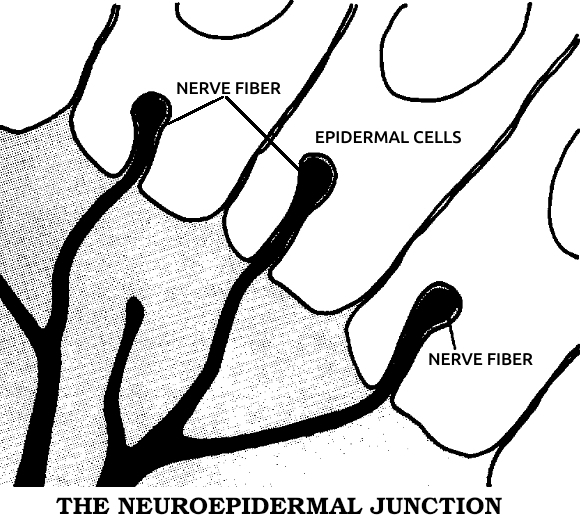

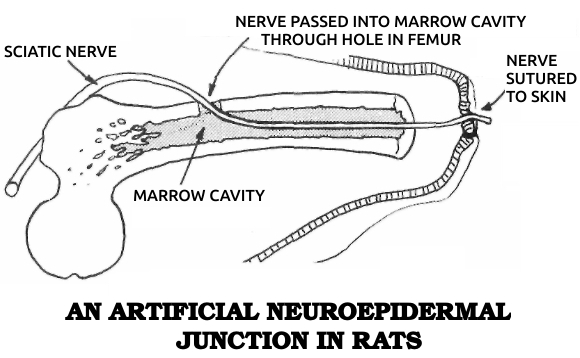

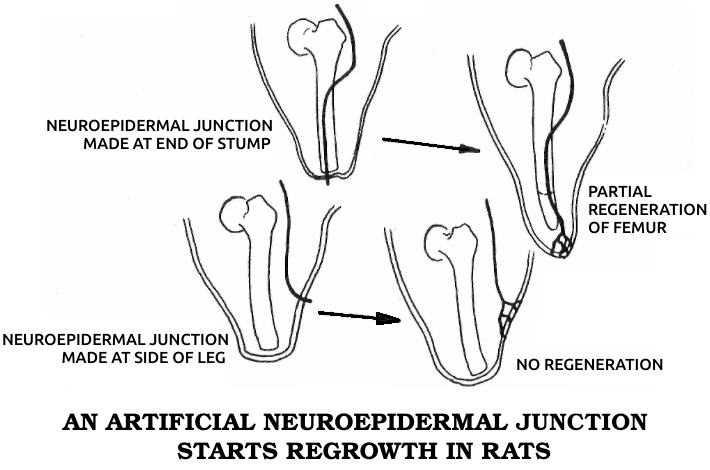

Neuroepidermal junction: A structure formed from the union of skin and nerve fibers at the site of tissue loss in animals capable of regeneration. It produces the specific electrical currents that bring about the subsequent regeneration.

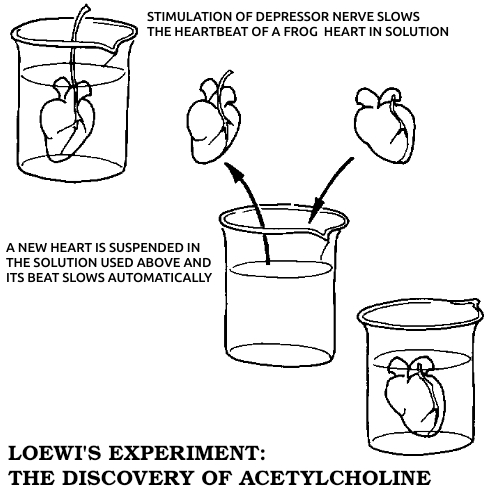

Neurohormone: A chemical produced by nerve cells that has effects on other nerve cells or other parts of the body.

Neuron, or neurone: A nerve cell.

Neurotransmitter: A chemical used to carry the nerve impulse across the synapse.

Osteoblast: A cell that forms bone by producing the specific type of collagen that forms bone's underlying structure.

Osteogenesis: The formation of new bone, whether in embryogenesis, postnatal development, or fracture healing.

PEMF: Pulsed electromagnetic field.

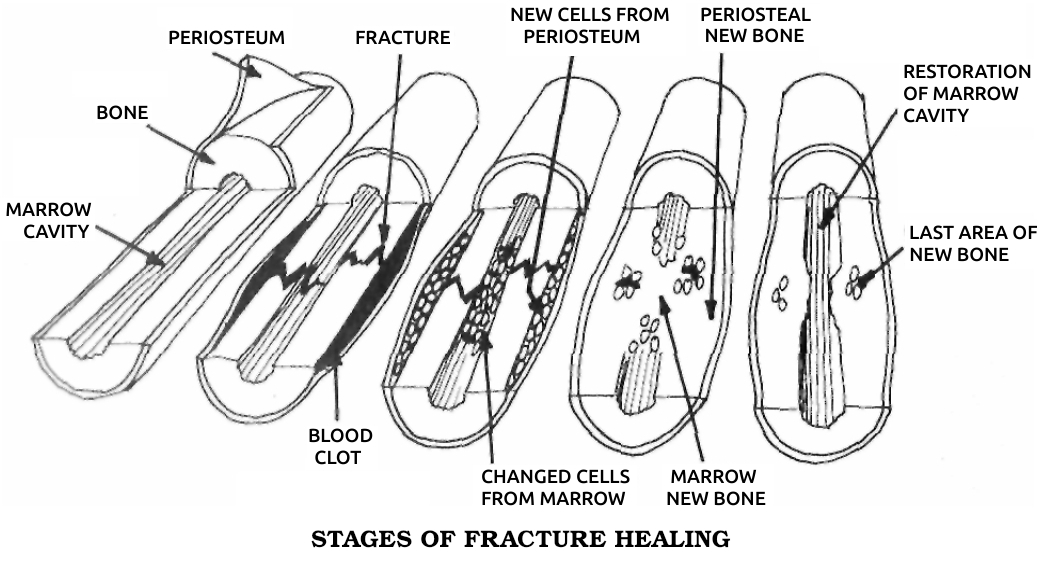

Periosteum: A layer of tough, fibrous collagen that surrounds each bone. It contains cells that turn into osteoblasts during fracture healing.

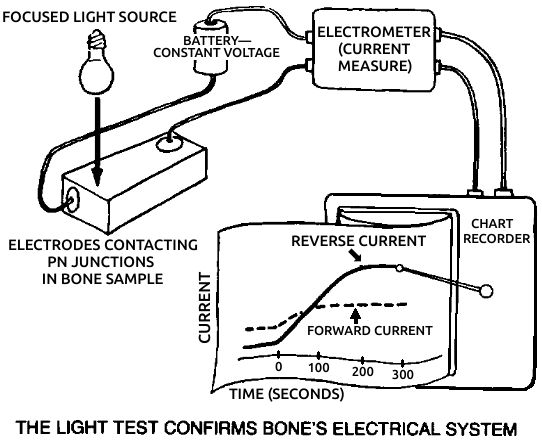

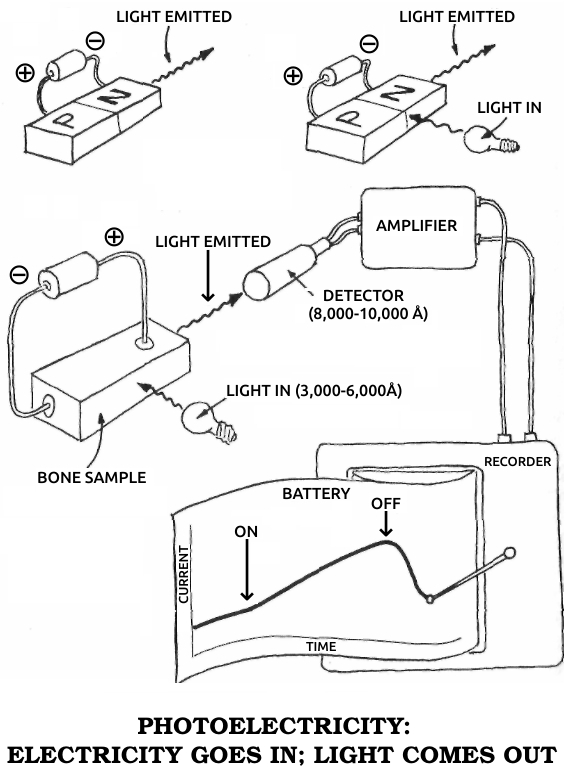

Photoelectric material: A substance that changes light into electrical energy, producing an electric current when light shines on it.

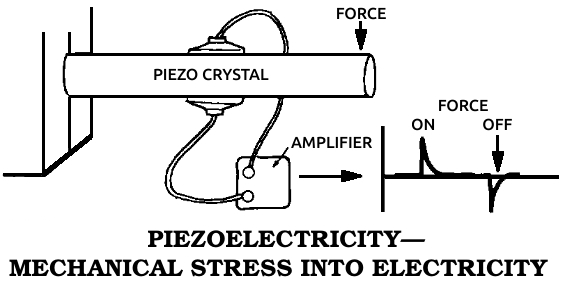

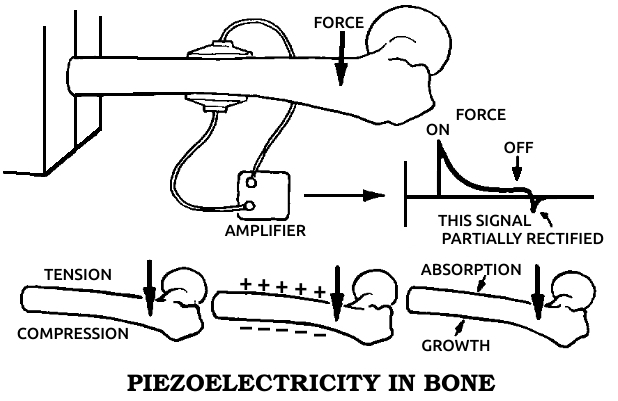

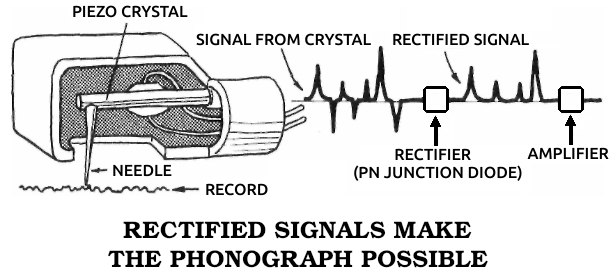

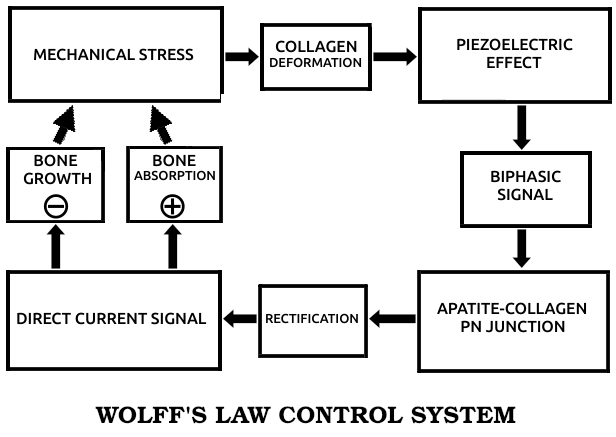

Piezoelectric material: A substance that changes mechanical stress into electrical energy, producing an electrical current when deformed by pressure or bending.

Potential: Another term for voltage, which may at times be limited to a voltage that exists without a current but is potentially able to cause a current to flow if a circuit is completed.

Preformation: See Eᴘɪɢᴇɴᴇsɪs.

Pyroelectric material: A substance that changes thermal energy into electrical energy, producing an electric current when heated.

Redifferentiation: The process in which a previously mature cell that has dedifferentiated becomes a mature, specialized cell again. See also Dᴇᴅɪғғᴇʀᴇɴᴛɪᴀᴛɪᴏɴ, Dɪғғᴇʀᴇɴᴛɪᴀᴛɪᴏɴ.

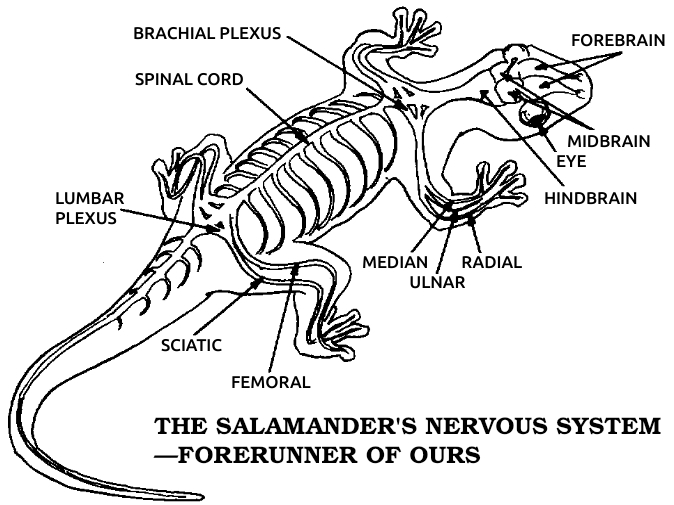

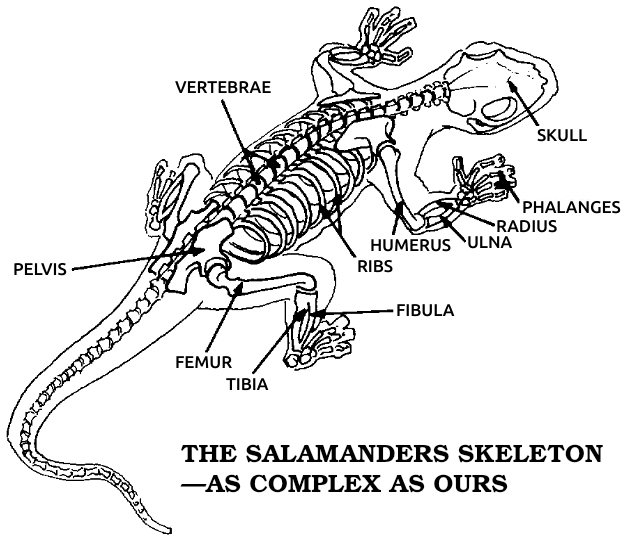

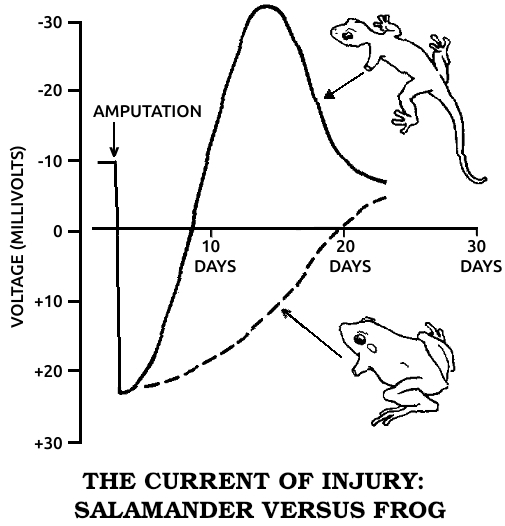

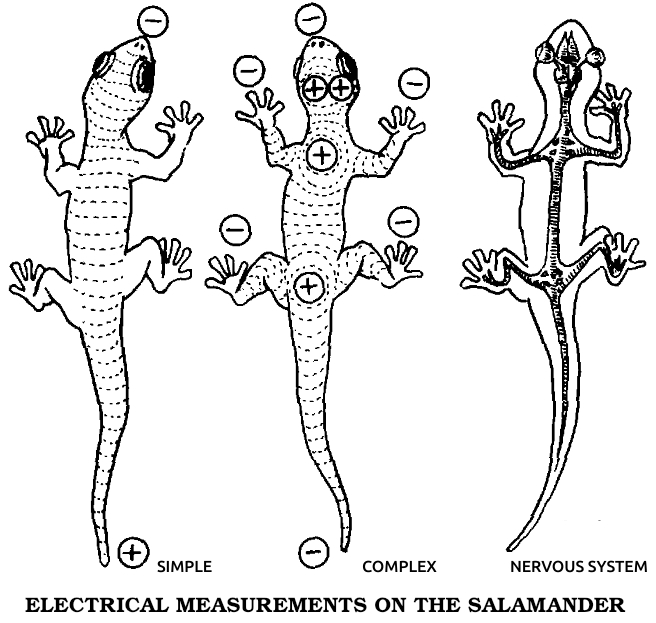

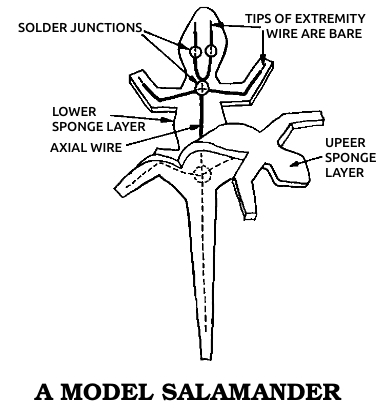

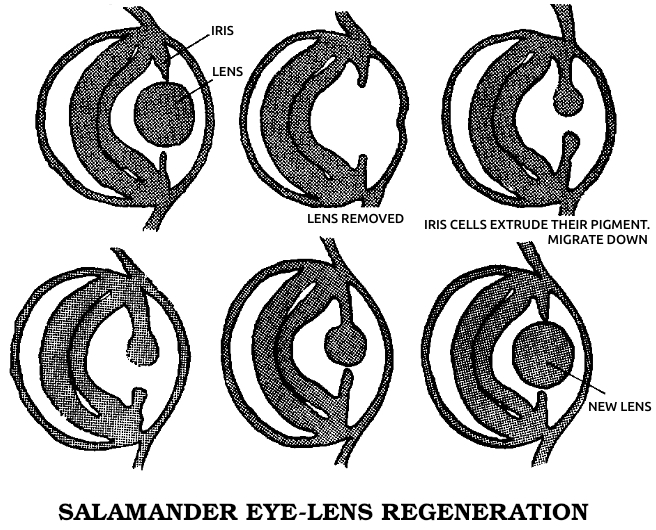

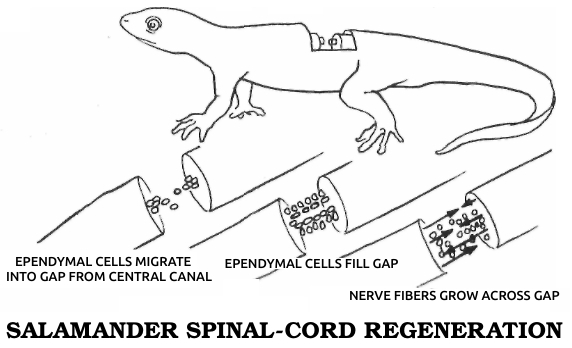

Salamander: Any of a group of amphibians related to frogs but retaining a tail throughout their lives. Salamanders live in water or moist environments. Most are 2 to 3 inches long, but some grow to more than a foot in length. Since salamanders are vertebrates, with an anatomy similar to ours, and since they regenerate many parts of their bodies very well, they are the animals most commonly used in regeneration research.

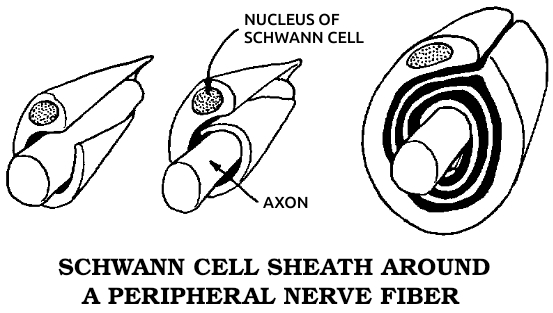

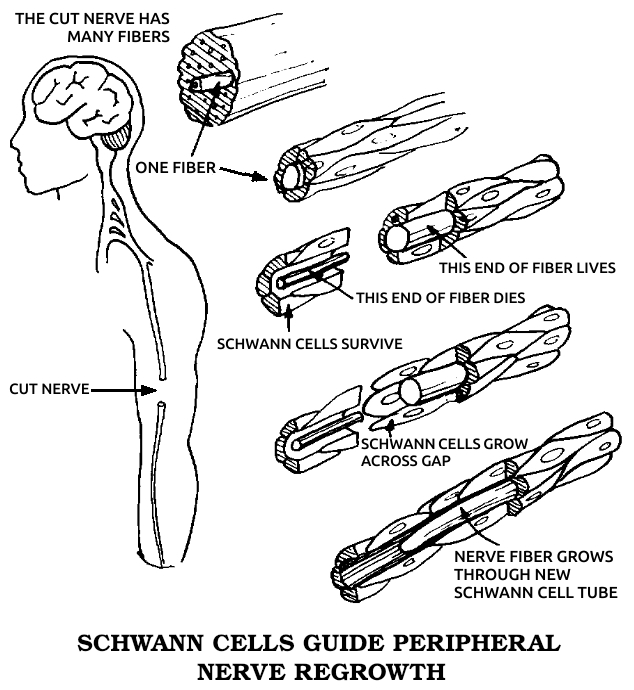

Schwann cells: The cells that surround all of the nerves outside of the brain and spinal cord. See also Gʟɪᴀ.

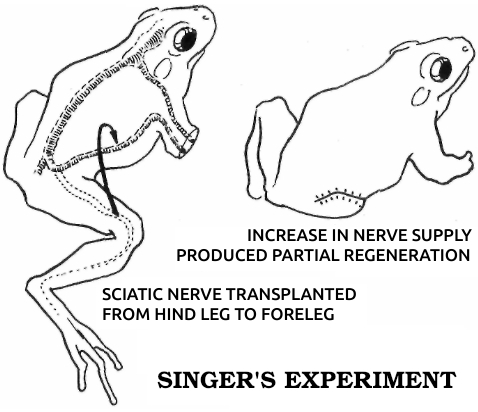

Sciatic nerve: The main nerve in the leg. It includes both motor nerve fibers carrying impulses to the leg muscles and sensory nerve fibers carrying impulses to the brain.

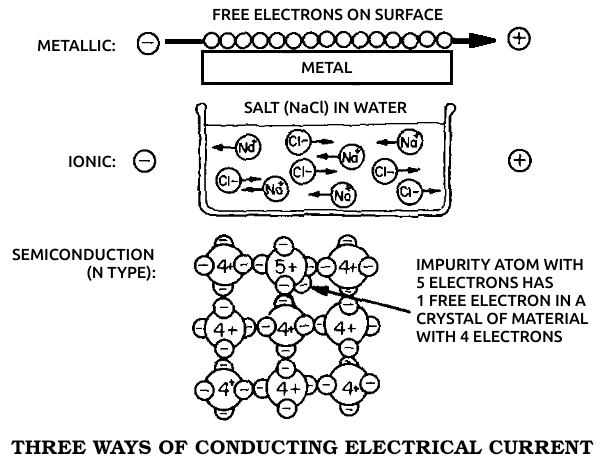

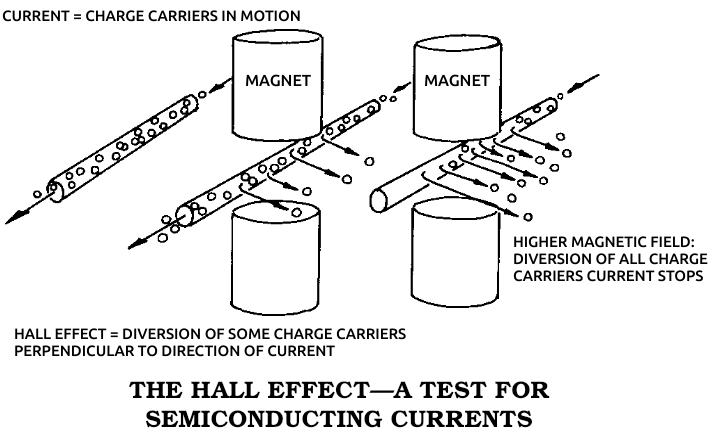

Semiconduction: The conduction of electrical current by the movement of electrons or "holes" (the absence of electrons) through a crystal lattice. It is the third and most recently discovered method of electrical conduction. The others are metallic conduction, which works by means of electrons traveling along a wire, and ionic conduction, which works by movement of charged atoms (ions) in an electrolyte. Semiconductors conduct less current than metals but are far more versatile than either of the other types of conduction. Thus they are the basic materials of the transistors and integrated circuits used in most electronic devices today.

Superconduction: The conduction of an electrical current by a specific material that under certain circumstances (generally very low temperatures) offers no resistance to the flow. Such a current will continue undiminished as long as the necessary circumstances are maintained.

Synapse: The junction between one nerve cell and another, or between a nerve cell and some other cell. See also Nᴇᴜʀᴏᴛʀᴀɴsᴍɪᴛᴛᴇʀ.

Undifferentiated: Unspecialized, a term applied to cells that are in a primitive or embryonic state. See also Dᴇᴅɪғғᴇʀᴇɴᴛɪᴀᴛɪᴏɴ, Dɪғғᴇʀᴇɴᴛɪᴀᴛɪᴏɴ, Rᴇᴅɪғғᴇʀᴇɴᴛɪᴀᴛɪᴏɴ.

Vertebrate: Any of the animals that have backbones, including all fish, amphibians, reptiles, birds, and mammals. All vertebrates share the same basic anatomical arrangement, with a backbone, four extremities, and similar construction of the muscular, nervous, and circulatory systems.

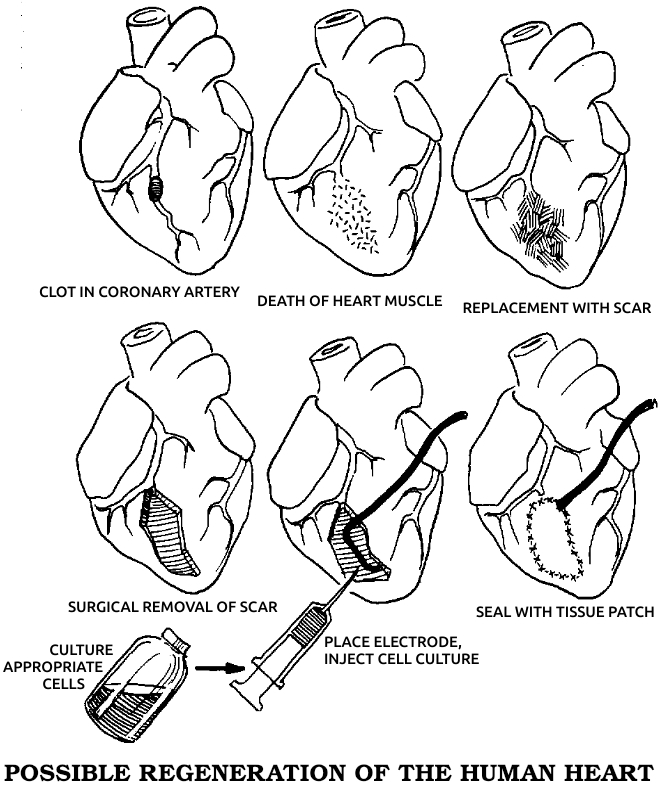

Dedication
Glossary
The Promise of the Art
Acknowledgments
About the Author
Growth and Regrowth
Hydra's Heads and Medusa's Blood
Failed Healing in Bone
A Fable Made Fact
The Embryo at the Wound
Mechanics of Growth
Control Problems
Nerve Connections
Vital Electricity
The Sign of the Miracle
The Tribunal
The Reversals
The Stimulating Current
Life's Potentials
Unpopular Science
Undercurrents in Neurology
Conducting in a New Mode
Testing the Concept
The Circuit of Awareness
Closing the Circle
The Artifact Man and a Friend in Deed
The Electromagnetic Brain
The Ticklish Gene
The Pillars of the Temple
The Inner Electronics of Bone
A Surprise in the Blood
Do-It-Yourself Dedifferentiation
The Genetic Key
Good News for Mammals
A First Step with a Rat Leg
Childhood Powers, Adult Prospects
Our Hidden Healing Energy
The Silver Wand
Minus for Growth, Plus for Infection
Positive Surprises
The Fracture Market
The Organ Tree
Cartilage
Skull Bones
Eyes
Muscle
Abdominal Organs
The Lazarus Heart
The Five-Alarm Blastema
The Self-Mending Net
Peripheral Nerves
The Spinal Cord
The Brain
Righting a Wrong Turn
A Reintegrative Approach
The Essence of Life
The Missing Chapter
The Constellation of the Body
Unifying Pathways
Breathing with the Earth
The Attractions of Home
The Face of the Deep
Crossroads of Evolution
Hearing Without Ears
Maxwell's Silver Hammer
Subliminal Stress
Power Versus People
Fatal Locations
The Central Nervous System
The Endocrine, Metabolic, and Cardiovascular Systems
Growth Systems and Immune Response
Conflicting Standards
Invisible Warfare
Critical Connections
Political Science

I remember how it was before penicillin. I was a medical student at the end of World War II, before the drug became widely available for civilian use, and I watched the wards at New York’s Bellevue Hospital fill to overflowing each winter. A veritable Byzantine city unto itself, Bellevue sprawled over four city blocks, its smelly, antiquated buildings jammed together at odd angles and interconnected by a rabbit warren of underground tunnels. In wartime New York, swollen with workers, sailors, soldiers, drunks, refugees, and their diseases from all over the world, it was perhaps the place to get an all-inclusive medical education. Bellevue’s charter decreed that, no matter how full it was, every patient who needed hospitalization had to be admitted. As a result, beds were packed together side by side, first in the aisles, then out into the corridor. A ward was closed only when it was physically impossible to get another bed out of the elevator.

Most of these patients had lobar (pneumococcal) pneumonia. It didn’t take long to develop; the bacteria multiplied unchecked, spilling over from the lungs into the bloodstream, and within three to five days of the first symptom the crisis came. The fever rose to 104 or 105 degrees Fahrenheit and delirium set in. At that point we had two signs to go by: If the skin remained hot and dry, the victim would die; sweating meant the patient would pull through. Although sulfa drugs often were effective against the milder pneumonias, the outcome in severe lobar pneumonia still depended solely on the struggle between the infection and the patient’s own resistance. Confident in my new medical knowledge, I was horrified to find that we were powerless to change the course of this infection in any way.

It’s hard for anyone who hasn’t lived through the transition to realize the change that penicillin wrought. A disease with a mortality rate near 50 percent, that killed almost a hundred thousand Americans each year, that struck rich as well as poor and young as well as old, and against which we’d had no defense, could suddenly be cured without fail in a few hours by a pinch of white powder. Most doctors who have graduated since 1950 have never even seen pneumococcal pneumonia in crisis.

Although penicillin’s impact on medical practice was profound, its impact on the philosophy of medicine was even greater. When Alexander Fleming noticed in 1928 that an accidental infestation of the mold Penicillium notatum had killed his bacterial cultures, he made the crowning discovery of scientific medicine. Bacteriology and sanitation had already vanquished the great plagues. Now penicillin and subsequent antibiotics defeated the last of the invisibly tiny predators.

The drugs also completed a change in medicine that had been gathering strength since the nineteenth century. Before that time, medicine had been an art. The masterpiece—a cure—resulted from the patient’s will combined with the physician’s intuition and skill in using remedies culled from millennia of observant trial and error. In the last two centuries medicine more and more has come to be a science, or more accurately the application of one science, namely biochemistry. Medical techniques have come to be tested as much against current concepts in biochemistry as against their empirical results. Techniques that don’t fit such chemical concepts—even if they seem to work—have been abandoned as pseudoscientific or downright fraudulent.

At the same time and as part of the same process, life itself came to be defined as a purely chemical phenomenon. Attempts to find a soul, a vital spark, a subtle something that set living matter apart from the nonliving, had failed. As our knowledge of the kaleidoscopic activity within cells grew, life came to be seen as an array of chemical reactions, fantastically complex but no different in kind from the simpler reactions performed in every high school lab. It seemed logical to assume that the ills of our chemical flesh could be cured best by the right chemical antidote, just as penicillin wiped out bacterial invaders without harming human cells. A few years later the decipherment of the DNA code seemed to give such stout evidence of life’s chemical basis that the double helix became one of the most hypnotic symbols of our age. It seemed the final proof that we’d evolved through 4 billion years of chance molecular encounters, aided by no guiding principle but the changeless properties of the atoms themselves.

The philosophical result of chemical medicine’s success has been belief in the Technological Fix. Drugs became the best or only valid treatments for all ailments. Prevention, nutrition, exercise, lifestyle, the patient’s physical and mental uniqueness, environmental pollutants—all were glossed over. Even today, after so many years and millions of dollars spent for negligible results, it’s still assumed that the cure for cancer will be a chemical that kills malignant cells without harming healthy ones. As surgeons became more adept at repairing bodily structures or replacing them with artificial parts, the technological faith came to include the idea that a transplanted kidney, a plastic heart valve, or a stainless-steel-and-Teflon hip joint was just as good as the original—or even better, because it wouldn’t wear out as fast. The idea of a bionic human was the natural outgrowth of the rapture over penicillin. If a human is merely a chemical machine, then the ultimate human is a robot.

No one who’s seen the decline of pneumonia and a thousand other infectious diseases, or has seen the eyes of a dying patient who’s just been given another decade by a new heart valve, will deny the benefits of technology. But, as most advances do, this one has cost us something irreplaceable: medicine’s humanity. There’s no room in technological medicine for any presumed sanctity or uniqueness of life. There’s no need for the patient’s own self-healing force nor any strategy for enhancing it. Treating a life as a chemical automaton means that it makes no difference whether the doctor cares about—or even knows—the patient, or whether the patient likes or trusts the doctor.

Because of what medicine left behind, we now find ourselves in a real technological fix. The promise to humanity of a future of golden health and extended life has turned out to be empty. Degenerative diseases— heart attacks, arteriosclerosis, cancer, stroke, arthritis, hypertension, ulcers, and all the rest—have replaced infectious diseases as the major enemies of life and destroyers of its quality. Modern medicine’s incredible cost has put it farther than ever out of reach of the poor and now threatens to sink the Western economies themselves. Our cures too often have turned out to be double-edged swords, later producing a secondary disease; then we search desperately for another cure. And the dehumanized treatment of symptoms rather than patients has alienated many of those who can afford to pay. The result has been a sort of medical schizophrenia in which many have forsaken establishment medicine in favor of a holistic, prescientific type that too often neglects technology’s real advantages but at least stresses the doctor-patient relationship, preventive care, and nature’s innate recuperative power.

The failure of technological medicine is due, paradoxically, to its success, which at first seemed so overwhelming that it swept away all aspects of medicine as an art. No longer a compassionate healer working at the bedside and using heart and hands as well as mind, the physician has become an impersonal white-gowned ministrant who works in an office or laboratory. Too many physicians no longer learn from their patients, only from their professors. The breakthroughs against infections convinced the profession of its own infallibility and quickly ossified its beliefs into dogma. Life processes that were inexplicable according to current biochemistry have been either ignored or misinterpreted. In effect, scientific medicine abandoned the central rule of science—revision in light of new data. As a result, the constant widening of horizons that has kept physics so vital hasn’t occurred in medicine. The mechanistic assumptions behind today’s medicine are left over from the turn of the century, when science was forcing dogmatic religion to see the evidence of evolution. (The re-eruption of this same conflict today shows that the battle against frozen thinking is never finally won.) Advances in cybernetics, ecological and nutritional chemistry, and solid-state physics haven’t been integrated into biology. Some fields, such as parapsychology, have been closed out of mainstream scientific inquiry altogether. Even the genetic technology that now commands such breathless admiration is based on principles unchallenged for decades and unconnected to a broader concept of life. Medical research, which has limited itself almost exclusively to drug therapy, might as well have been wearing blinders for the last thirty years.

It’s no wonder, then, that medical biology is afflicted with a kind of tunnel vision. We know a great deal about certain processes, such as the genetic code, the function of the nervous system in vision, muscle movement, blood clotting, and respiration on both the somatic and the cellular levels. These complex but superficial processes, however, are only the tools life uses for its survival. Most biochemists and doctors aren’t much closer to the “truth” about life than we were three decades ago. As Albert Szent-Györgyi, the discoverer of vitamin C, has written, “We know life only by its symptoms.” We understand virtually nothing about such basic life functions as pain, sleep, and the control of cell differentiation, growth, and healing. We know little about the way every organism regulates its metabolic activity in cycles attuned to the fluctuations of earth, moon, and sun. We are ignorant about nearly every aspect of consciousness, which may be broadly defined as the self-interested integrity that lets each living thing marshal its responses to eat, thrive, reproduce, and avoid danger by patterns that range from the tropisms of single cells to instinct, choice, memory, learning, individuality, and creativity in more complex life-forms. The problem of when to “pull the plug” shows that we don’t even know for sure how to diagnose death. Mechanistic chemistry isn’t adequate to understand these enigmas of life, and it now acts as a barrier to studying them. Erwin Chargaff, the biochemist who discovered base pairing in DNA and thus opened the way for understanding gene structure, phrased our dilemma precisely when he wrote of biology, “No other science deals in its very name with a subject that it cannot define.”

Given the present climate, I’ve been a lucky man. I haven’t been a good, efficient doctor in the modern sense. I’ve spent far too much time on a few incurable patients whom no one else wanted, trying to find out how our ignorance failed them. I’ve been able to tack against the prevailing winds of orthodoxy and indulge my passion for experiment. In so doing I’ve been part of a little-known research effort that has made a new start toward a definition of life.

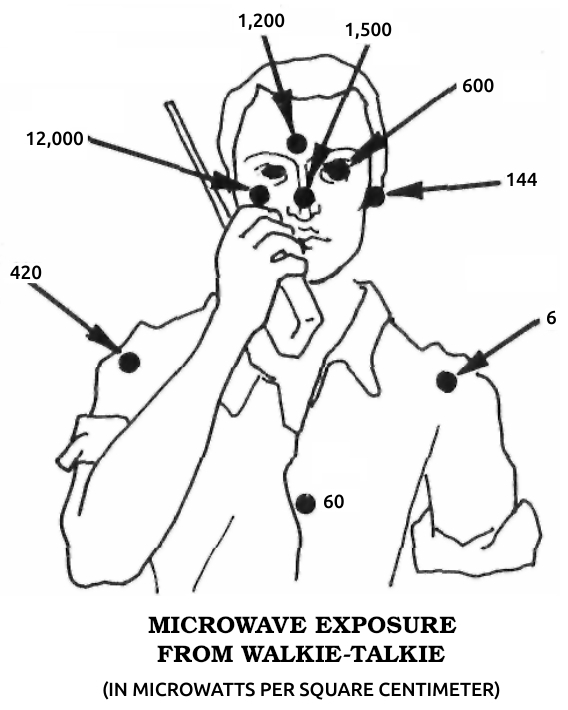

My research began with experiments on regeneration, the ability of some animals, notably the salamander, to grow perfect replacements for parts of the body that have been destroyed. These studies, described in Part 1, led to the discovery of a hitherto unknown aspect of animal life—the existence of electrical currents in parts of the nervous system. This breakthrough in turn led to a better understanding of bone fracture healing, new possibilities for cancer research, and the hope of human regeneration—even of the heart and spinal cord—in the not too distant future, advances that are discussed in Parts 2 and 3. Finally, a knowledge of life’s electrical dimension has yielded fundamental insights (considered in Part 4) into pain, healing, growth, consciousness, the nature of life itself, and the dangers of our electromagnetic technology.

I believe these discoveries presage a revolution in biology and medicine. One day they may enable the physician to control and stimulate healing at will. I believe this new knowledge will also turn medicine in the direction of greater humility, for we should see that whatever we achieve pales before the self-healing power latent in all organisms. The results set forth in the following pages have convinced me that our understanding of life will always be imperfect. I hope this realization will make medicine no less a science, yet more of an art again. Only then can it deliver its promised freedom from disease.

We wish to thank our wives, Lillian Becker and Maureen Sugden, without whose love, help, and patience this book would never have been completed. We also wish to acknowledge the contributions of editor Maria Guarnaschelli and copy editor Bruce Giffords, as well as Julie Weiner, the editor who first saw promise in this project, and Susan Schiefelbein, who began a draft of the work several years ago. In addition, we are grateful to friends, colleagues, researchers, and sources too numerous to list. To those not mentioned in the text we hereby offer our heartfelt thanks.

Co-author's Note

Bob Becker spent almost thirty years working on the substance of this book. I spent not quite two in helping to organize the material and fit the words together. Therefore I have chosen to tell the entire story from his point of view. Unless otherwise noted, "I" refers to him and "we" to him and his collaborators in research.

— Gary Selden

Robert Otto Becker (May 31, 1923–May 14, 2008) was a U.S. orthopedic surgeon who is best known for his research in biocybernetics. He spent his entire career at the Veterans Administration Hospital, Syracuse, New York, where he served as chief of orthopedic surgery, chief of research, and head of a research laboratory devoted to studying the role of bioelectrical phenomena in growth and healing, tissue regeneration, infection control, and the health impact of artificial environmental electromagnetic energy.

Robert Otto Becker was born in 1923 in River Edge, New Jersey, and raised in Valley Stream, New York, where his father Otto Julius Becker served as the pastor of St. Paul’s Lutheran Church; his mother was Elizabeth Blanck Becker. In 1941 he entered Gettysburg College in Pennsylvania, where he majored in biology and performed his first experiments on salamander regeneration. He served in the army from 1942 to 1946; when he completed his bachelor’s degree he entered medical school at New York University, and met and married Lillian Moller, a fellow student. He obtained his medical degree in 1948, interned for a year, and for the next seven years studied pathology, surgery, and orthopedic surgery; for two of those years he was a medical officer in the army. He chose to specialize in orthopedic surgery, and his training took place mostly at the Veterans Administration hospital in Brooklyn.

The Veterans Administration offered Becker the opportunity to do supported research as well as clinical medicine, and in 1956 he became the chief of orthopedics at the Veterans Administration hospital in Syracuse, NY. The job was generally regarded as unattractive for a physician, but he accepted it in exchange for the resources and freedom to do research. He also became an adjunct professor at the State University of New York, on the same campus as the hospital.

Becker was interested in the medically significant problem of how the body regulated growth and healing such that the processes started and stopped as appropriate for the host, and produced exactly the kind of tissue needed. He was influenced by the cybernetic concepts of Norbert Wiener, the biological theories of Rene Dubos, the ideas of Peter Medawar, the experimental observations of Harold Burr, and the theories of Albert Szent-Györgyi; he adapted their work to his interest in how biological processes were controlled.

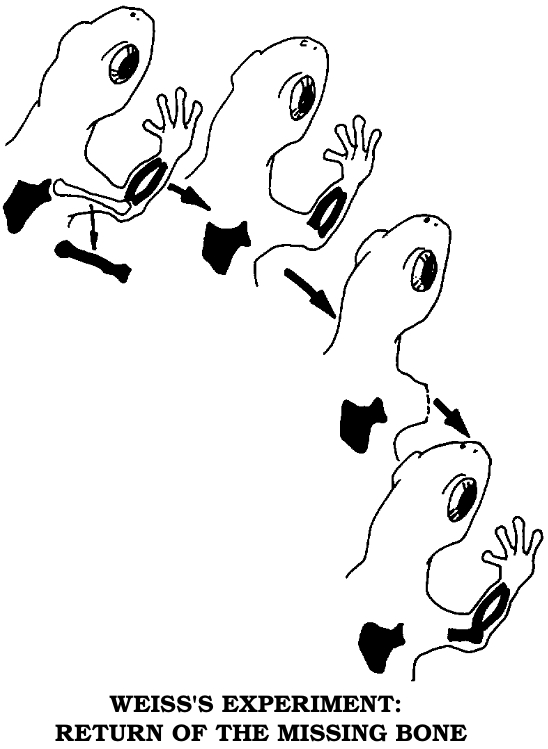

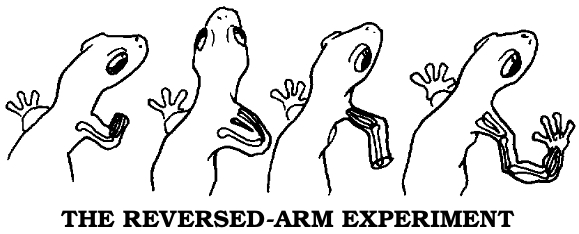

From the outset, Becker’s research was novel and controversial. His biocybernetic approach to the study of growth-related phenomena differed from the orthodox approach based on biochemistry. In each area where he pursued biocybernetic models he encountered criticism from established researchers who favored models based on reductionism. His critics included W. Ross Adey in the area of public health, Lionel Jaffe in limb regeneration, C. Andrew Bassett in side-effects of electrical stimulation, Philip Handler in interpretation of animal studies, Paul Weiss in the role of cellular dedifferentiation, and Morris Shamos in the biophysics of bone.

Becker’s initial research studies were well received, as evidenced by a series of fourteen publications in experimental biology that appeared in prestigious journals during a four-year period in the early 1960s. In 1964 he won the William A. Middleton Award, given by the Veterans Administration to the scientist who produced the most outstanding research. The same year he was appointed a Medical Investigator at the Veterans Administration, a distinction he held until 1976.

He believed that it was the duty of a taxpayer-funded researcher to speak directly to laypersons regarding his research results, and he did so frequently throughout his twenty-year research career. Especially noteworthy were articles in Saturday Review, Hutchings Journal, the Medical World News, and Technology Review, his interview on the national television show “60 Minutes,” his statements on public health made to the US House of Representatives in 1967, 1987, and 1990, and his testimony in hearings in New York concerning the health impacts of high-voltage powerlines.

The cumulative effect of the novelty of his research and his practice of speaking publicly about its implications was the loss of his research funding from the National Institutes of Health and the Veterans Administration; Becker’s public activities brought unwanted controversy to both agencies. Following a public dispute with the president of the National Academy of Sciences regarding scientific bias in the evaluation of a public health issue, Becker was forced to retire.

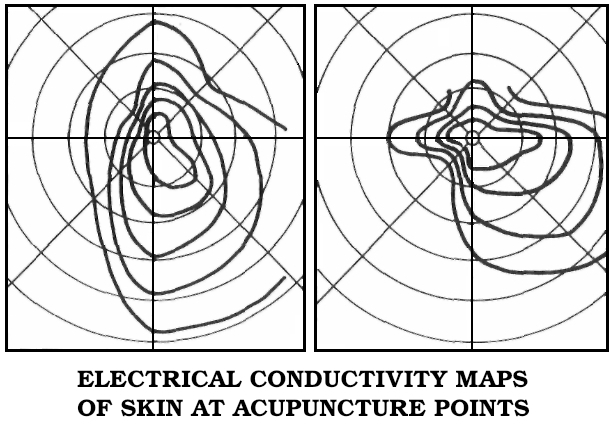

During Becker’s public involvement in the four-year powerline hearings, his grant renewal requests were denied, sometimes without explanation; a main NIH grant that had funded positions in his lab for over a decade was terminated, as was his grant to study acupuncture. Soon after he lost the grants, during an interview on “60 Minutes” in February 1977 regarding the Navy’s proposed Sanguine antenna, Becker suggested that the National Academy of Sciences committee then evaluating the safety of the antenna was biased against finding biological effects. The Academy president Philip Handler, who had selected the committee, called for Becker’s firing; Becker continued to function as a staff physician but lost his appointment as Medical Investigator, which had the effect of reducing his staff by half. In early 1979 the Veterans Administration closed his laboratory; with no capacity to continue his research, he retired. He was 56. In his preface to a 1985 book about the New York hearings and aftermath, he wrote that the book revealed not only the health hazard, but also “the hazards…of raising the issue.”

In the years following his forced retirement, Becker wrote extensively about his research in articles, books, and public testimony, recounting its history, explaining its meaning, and providing what he viewed as a coherent basis for examining medical issues in general and the specific issue of electromagnetic health risks. He cofounded the Journal of Bioelectricity (subsequently Electromagnetic Medicine and Biology), gave the 1983 President’s Guest Address before the American Academy of Orthopedic Surgeons, and testified again in congressional hearings on health risks from electromagnetic technologies.

Becker articulated his views in four books. In Electromagnetism and Life, published in 1982, he argued that exposure to artificial environmental electromagnetic energy was a general biologic stressor and could produce functional changes in biological systems. Mechanisms of Growth Control, published in 1981, was the proceedings of an international conference on regeneration that he organized. Writing for a general audience in The Body Electric in 1985 and Cross Currents in 1990, Becker summarized his research and his views on science and medicine in historical perspective.

He patented a cell-modification process in which cells were dedifferentiated by ions from electrically positive silver electrodes; the modified cells were said to be capable of regenerating organs and tissues. An FDA-approved clinical study of his method was sponsored by the Sybron Corporation at the LSU Medical School in Shreveport to study the safety and efficacy of the method for treating osteomyelitis, but the Sybron product was not brought to market.

Copyright © 1985 by Robert O. Becker, M.D., and Gary Selden. All rights reserved. No part of this book may be reproduced or utilized in any form or by any means, electronic or mechanical, including photocopying, recording, or by any information storage or retrieval system, without permission in writing from the Publisher. Inquiries should be addressed to Permission Department, William Morrow and Company, 1350 Ave of the Americas, New York, NY 10019.

Library of Congress Cataloging in Publication Data

Becker, Robert O.

The Body Electric.

Reprint. Originally published: New York: Morrow, © 1985.

Includes index.

1. Electromagnetism—Physiological effects

2. Electromagnetism in medicine. I. Selden, Gary.

II. Title.

QP82.2.E43B4 1987 591.19'127 86-25168

ISBN 0-688-06971-1 (pbk.)

Printed in the United States of America

26 27 28 29 30 31 32 33 34 35

BOOK DESIGN BY PATTY LOWY

PART 1: Growth and Regrowth

Salamander: energy’s seed sleeping interred in the marrow …

—Octavio Paz

There is only one health, but diseases are many. Likewise, there appears to be one fundamental force that heals, although the myriad schools of medicine all have their favorite ways of cajoling it into action.

Our prevailing mythology denies the existence of any such generalized force in favor of thousands of little ones sitting on pharmacists’ shelves, each one potent against only a few ailments or even a part of one. This system often works fairly well, especially for treatment of bacterial diseases, but it’s no different in kind from earlier systems in which a specific saint or deity, presiding over a specific healing herb, had charge of each malady and each part of the body. Modern medicine didn’t spring full-blown from the heads of Pasteur and Lister a hundred years ago.

If we go back further, we find that most medical systems have combined such specifics with a direct, unitary appeal to the same vital principle in all illnesses. The inner force can be tapped in many ways, but all are variations of four main, overlapping patterns: faith healing, magic healing, psychic healing, and spontaneous healing. Although science derides all four, they sometimes seem to work as well for degenerative diseases and long-term healing as most of what Western medicine can offer.

Faith healing creates a trance of belief in both patient and practitioner, as the latter acts as an intercessor or conduit between the sick mortal and a presumed higher power. Since failures are usually ascribed to a lack of faith by the patient, this brand of medicine has always been a gold mine for charlatans. When bona fide, it seems to be an escalation of the placebo effect, which produces improvement in roughly one third of subjects who think they’re being treated but are actually being given dummy pills in tests of new drugs. Faith healing requires even more confidence from the patient, so the disbeliever probably can prevent a cure and settle for the poor satisfaction of “I told you so.” If even a few of these oft-attested cases are genuine, however, the healed one suddenly finds faith turned into certainty as the withered arm aches with unaccustomed sensation, like a starved animal waking from hibernation.

Magical healing shifts the emphasis from the patient’s faith to the doctor’s trained will and occult learning. The legend of Teta, an Egyptian magician from the time of Khufu (Cheops), builder of the Great Pyramid, can serve as an example. At the age of 110, Teta was summoned into the royal presence to demonstrate his ability to rejoin a severed head to its body, restoring life. Khufu ordered a prisoner beheaded, but Teta discreetly suggested that he would like to confine himself to laboratory animals for the moment. So a goose was decapitated. Its body was laid at one end of the hall, its head at the other. Teta repeatedly pronounced his words of power, and each time, the head and body twitched a little closer to each other, until finally the two sides of the cut met. They quickly fused, and the bird stood up and began cackling. Some consider the legendary miracles of Jesus part of the same ancient tradition, learned during Christ’s precocious childhood in Egypt.

Whether or not we believe in the literal truth of these particular accounts, over the years so many otherwise reliable witnesses have testified to healing “miracles” that it seems presumptuous to dismiss them all as fabrications. Based on the material presented in this book, I suggest Coleridge’s “willing suspension of disbelief” until we understand healing better. Shamans apparently once served at least some of their patients well, and still do where they survive on the fringes of the industrial world. Magical medicine suggests that our search for the healing power isn’t so much an exploration as an act of remembering something that was once intuitively ours, a form of recall in which the knowledge is passed on or awakened by initiation and apprenticeship to the man or woman of power.

Sometimes, however, the secret needn’t be revealed to be used. Many psychic healers have been studied, especially in the Soviet Union, whose gift is unconscious, unsought, and usually discovered by accident. One who demonstrated his talents in the West was Oskar Estebany. A Hungarian Army colonel in the mid-1930s, Estebany noticed that horses he groomed got their wind back and recovered from illnesses faster than those cared for by others. He observed and used his powers informally for years, until, forced to emigrate after the 1956 Hungarian revolution, he settled in Canada and came to the attention of Dr. Bernard Grad, a biologist at McGill University. Grad found that Estebany could accelerate the healing of measured skin wounds made on the backs of mice, as compared with controls. He didn’t let Estebany touch the animals, but only place his hands near their cages, because handling itself would have fostered healing. Estebany also speeded up the growth of barley plants and reactivated ultraviolet-damaged samples of the stomach enzyme trypsin in much the same way as a magnetic field, even though no magnetic field could be detected near his body with the instruments of that era.

The types of healing we’ve considered so far have trance and touch as common factors, but some modes don’t even require a healer. The spontaneous miracles at Lourdes and other religious shrines require only a vision, fervent prayer, perhaps a momentary connection with a holy relic, and intense concentration on the diseased organ or limb. Other reports suggest that only the intense concentration is needed, the rest being aids to that end. When Diomedes, in the fifth book of the Iliad, dislocates Aeneas’ hip with a rock, Apollo takes the Trojan hero to a temple of healing and restores full use of his leg within minutes. Hector later receives the same treatment after a rock in the chest fells him. We could dismiss these accounts as the hyperbole of a great poet if Homer weren’t so realistic in other battlefield details, and if we didn’t have similar accounts of soldiers in recent wars recovering from “mortal” wounds or fighting on while oblivious to injuries that would normally cause excruciating pain. British Army surgeon Lieutenant Colonel H. K. Beecher described 225 such cases in print after World War II. One soldier at Anzio in 1943, who’d had eight ribs severed near the spine by shrapnel, with punctures of the kidney and lung, who was turning blue and near death, kept trying to get off his litter because he thought he was lying on his rifle. His bleeding abated, his color returned, and the massive wound began to heal after no treatment but an insignificant dose of sodium amytal, a weak sedative given him because there was no morphine.

These occasional prodigies of battlefield stress strongly resemble the ability of yogis to control pain, stop bleeding, and speedily heal wounds with their will alone. Biofeedback research at the Menninger Foundation and elsewhere has shown that some of this same power can be tapped in people with no yogic training. That the will can be applied to the body’s ills has also been shown by Norman Cousins in his resolute conquest by laugh therapy of ankylosing spondylitis, a crippling disease in which the spinal discs and ligaments solidify like bone, and by some similar successes by users of visualization techniques to focus the mind against cancer.

Unfortunately, no approach is a sure thing. In our ignorance, the common denominator of all healing—even the chemical cures we profess to understand—remains its mysteriousness. Its unpredictability has bedeviled doctors throughout history. Physicians can offer no reason why one patient will respond to a tiny dose of a medicine that has no effect on another patient in ten times the amount, or why some cancers go into remission while others grow relentlessly unto death.

By whatever means, if the energy is successfully focused, it results in a marvelous transformation. What seemed like an inexorable decline suddenly reverses itself. Healing can almost be defined as a miracle. Instant regrowth of damaged parts and invincibility against disease are commonplaces of the divine world. They continually appear even in myths that have nothing to do with the theme of healing itself. Dead Vikings went to a realm where they could savor the joys of killing all day long, knowing their wounds would heal in time for the next day’s mayhem. Prometheus’ endlessly regrowing liver was only a clever torture arranged by Zeus so that the eagle sent as punishment for the god’s delivery of fire to mankind could feast on his most vital organ forever—although the tale also suggests that the prehistoric Greeks knew something of the liver’s ability to enlarge in compensation for damage to it.

The Hydra was adept at these offhand wonders, too. This was the monster Hercules had to kill as his second chore for King Eurystheus. The beast had somewhere between seven and a hundred heads, and each time Hercules cut one off, two new ones sprouted in its place—until the hero got the idea of having his nephew Iolaus cauterize each neck as soon as the head hit the ground.

In the eighteenth century the Hydra’s name was given to a tiny aquatic animal having seven to twelve “heads,” or tentacles, on a hollow, stalk-like body, because this creature can regenerate. The mythic Hydra remains a symbol of that ability, possessed to some degree by most animals, including us.

Generation, life’s normal transformation from seed to adult, would seem as unearthly as regeneration if it were not so commonplace. We see the same kinds of changes in each. The Greek hero Cadmus grows an army by sowing the teeth of a dragon he has killed. The primeval serpent makes love to the World Egg, which hatches all the creatures of the earth. God makes Adam from Eve’s rib, or vice versa in the later version. The Word of God commands life to unfold. The genetic words encoded in DNA spell out the unfolding. At successive but still limited levels of understanding, each of these beliefs tries to account for the beautifully bizarre metamorphosis. And if some savage told us of a magical worm that built a little windowless house, slept there a season, then one day emerged and flew away as a jeweled bird, we’d laugh at such superstition if we’d never seen a butterfly.

The healer’s job has always been to release something not understood, to remove obstructions (demons, germs, despair) between the sick patient and the force of life driving obscurely toward wholeness. The means may be direct—the psychic methods mentioned above—or indirect: Herbs can be used to stimulate recovery; this tradition extends from prehistoric wisewomen through the Greek herbal of Dioscorides and those of Renaissance Europe, to the prevailing drug therapies of the present. Fasting, controlled nutrition, and regulation of living habits to avoid stress can be used to coax the latent healing force from the sick body; we can trace this approach back from today’s naturopaths to Galen and Hippocrates. Attendants at the healing temples of ancient Greece and Egypt worked to foster a dream in the patient that would either start the curative process in sleep or tell what must be done on awakening. This method has gone out of style, but it must have worked fairly well, for the temples were filled with plaques inscribed by grateful patrons who’d recovered. In fact, this mode was so esteemed that Aesculapius, the legendary doctor who originated it, was said to have been given two vials filled with the blood of Medusa, the snaky-haired witch-queen killed by Perseus. Blood from her left side restored life, while that from her right took it away—and that’s as succinct a description of the tricky art of medicine as we’re likely to find.

The more I consider the origins of medicine, the more I’m convinced that all true physicians seek the same thing. The gulf between folk therapy and our own stainless-steel version is illusory. Western medicine springs from the same roots and, in the final analysis, acts through the same little-understood forces as its country cousins. Our doctors ignore this kinship at their—and worse, their patients’—peril. All worthwhile medical research and every medicine man’s intuition is part of the same quest for knowledge of the same elusive healing energy.

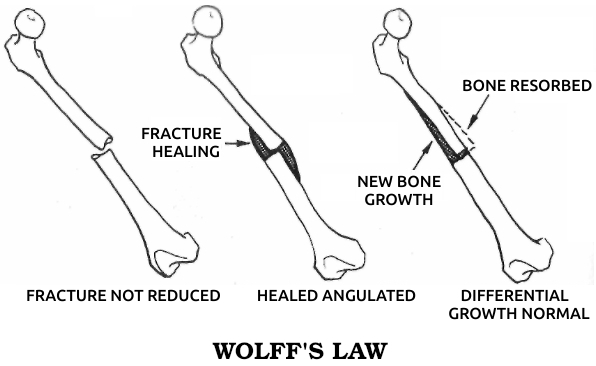

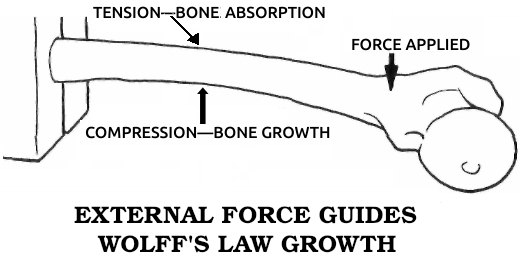

As an orthopedic surgeon, I often pondered one particular breakdown of that energy, my specialty’s major unsolved problem—nonunion of fractures. Normally a broken bone will begin to grow together in a few weeks if the ends are held close to each other without movement. Occasionally, however, a bone will refuse to knit despite a year or more of casts and surgery. This is a disaster for the patient and a bitter defeat for the doctor, who must amputate the arm or leg and fit a prosthetic substitute.

Throughout this century, most biologists have been sure only chemical processes were involved in growth and healing. As a result, most work on nonunions has concentrated on calcium metabolism and hormone relationships. Surgeons have also “freshened,” or scraped, the fracture surfaces and devised ever more complicated plates and screws to hold the bone ends rigidly in place. These approaches seemed superficial to me. I doubted that we would ever understand the failure to heal unless we truly understood healing itself.


When I began my research career in 1958, we already knew a lot about the logistics of bone mending. It seemed to involve two separate processes, one of which looked altogether different from healing elsewhere in the human body. But we lacked any idea of what set these processes in motion and controlled them to produce a bone bridge across the break.

Every bone is wrapped in a layer of tough, fibrous collagen, a protein that’s a major ingredient of bone itself and also forms the connective tissue or “glue” that fastens all our cells to each other. Underneath the collagen envelope are the cells that produce it, right next to the bone; together the two layers form the periosteum. When a bone breaks, these periosteal cells divide in a particular way. One of the daughter cells stays where it is, while the other one migrates into the blood clot surrounding the fracture and changes into a closely related type, an osteoblast, or bone-forming cell. These new osteoblasts build a swollen ring of bone, called a callus, around the break.

Another repair operation is going on inside the bone, in its hollow center, the medullary cavity. In childhood the marrow in this cavity actively produces red and white blood cells, while in adulthood most of the marrow turns to fat. Some active marrow cells remain, however, in the porous convolutions of the inner surface. Around the break a new tissue forms from the marrow cells, most readily in children and young animals. This new tissue is unspecialized, and the marrow cells seem to form it not by increasing their rate of division, as in the callus-forming periosteal cells, but by reverting to a primitive, neo-embryonic state. The unspecialized former marrow cells then change into a type of primitive cartilage cells, then into mature cartilage cells, and finally into new bone cells to help heal the break from inside. Under a microscope, the changes seen in cells from this internal healing area, especially from children a week or two after the bone was broken, seem incredibly chaotic, and they look frighteningly similar to highly malignant bone-cancer cells. But in most cases their transformations are under control, and the bone heals.

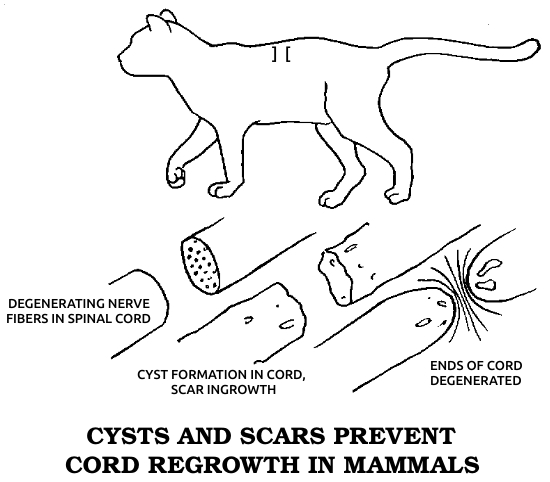

Dr. Marshall Urist, one of the great researchers in orthopedics, was to conclude in the early 1960s that this second type of bone healing is an evolutionary throwback, the only kind of regeneration that humans share with all other vertebrates. Regeneration in this sense means the regrowth of a complex body part, consisting of several different kinds of cells, in a fashion resembling the original growth of the same part in the embryo, in which the necessary cells differentiate from simpler cells or even from seemingly unrelated types. This process, which I’ll call true regeneration, must be distinguished from two other forms of healing. One, sometimes considered a variety of regeneration, is physiological repair, in which small wounds and everyday wear within a single tissue are made good by nearby cells of the same type, which merely proliferate to close the gap. The other kind of healing occurs when a wound is too big for single-tissue repair but the animal lacks the true regenerative competence to restore the damaged part. In this case the injury is simply patched over as well as possible with collagen fibers, forming a scar. Since true regeneration is most closely related to embryonic development and is generally strongest in simple animals, it may be considered the most fundamental mode of healing.

Nonunions failed to knit, I reasoned, because they were missing something that triggered and controlled normal healing. I’d already begun to wonder if the inner area of bone mending might be a vestige of true regeneration. If so, it would likely show the control process in a clearer or more basic form than the other two kinds of healing. I figured I stood little chance of isolating a clue to it in the multilevel turmoil of a broken bone itself, so I resolved to study regeneration alone, as it occurred in other animals.

Regeneration happens all the time in the plant kingdom. Certainly this knowledge was acquired very early in mankind’s history. Besides locking up their future generations in the mysterious seed, many plants, such as grapevines, could form a new plant from a single part of the old. Some classical authors had an inkling of animal regeneration—Aristotle mentions that the eyes of very young swallows recover from injury, and Pliny notes that lost “tails” of octopi and lizards regrow. However, regrowth was thought to be almost exclusively a plant prerogative.

The great French scientist René Antoine Ferchault de Réaumur made the first scientific description of animal regeneration in 1712. Réaumur devoted all his life to the study of “insects,” which at that time meant all invertebrates, everything that was obviously “lower” than lizards, frogs, and fish. In studies of crayfish, lobsters, and crabs, Réaumur proved the claims of Breton fishermen that these animals could regrow lost legs. He kept crayfish in the live-bait well of a fishing boat, removing a claw from each and observing that the amputated extremity reappeared in full anatomical detail. A tiny replica of the limb took shape inside the shell; when the shell was discarded at the next molting season, the new limb unfolded and grew to full size.

Réaumur was one of the scientific geniuses of his time. Elected to the Royal Academy of Sciences when only twenty-four, he went on to invent tinned steel, Réaumur porcelain (an opaque white glass), imitation pearls, better ways of forging iron, egg incubators, and the Réaumur thermometer, which is still used in France. At the age of sixty-nine he isolated gastric juice from the stomach and described its digestive function. Despite his other accomplishments, “insects” were his life’s love (he never married), and he probably was the first to conceive of the vast, diverse population of life-forms that this term encompassed. He rediscovered the ancient royal purple dye from Murex trunculus (a marine mollusk), and his work on spinning a fragile, filmy silk from spider webs was translated into Manchu for the Chinese emperor. He was the first to elucidate the social life and sexually divided caste system of bees. Due to his eclipse in later years by court-supported scientists who valued “common sense” over observation, Réaumur’s exhaustive study of ants wasn’t published until 1926. In the interim it had taken several generations of formicologists to cover the same ground, including the description of winged ants copulating in flight and proof that they aren’t a separate species but the sexual form of wingless ants. In 1734 he published the first of six volumes of his Natural History of Insects, a milestone in biology.

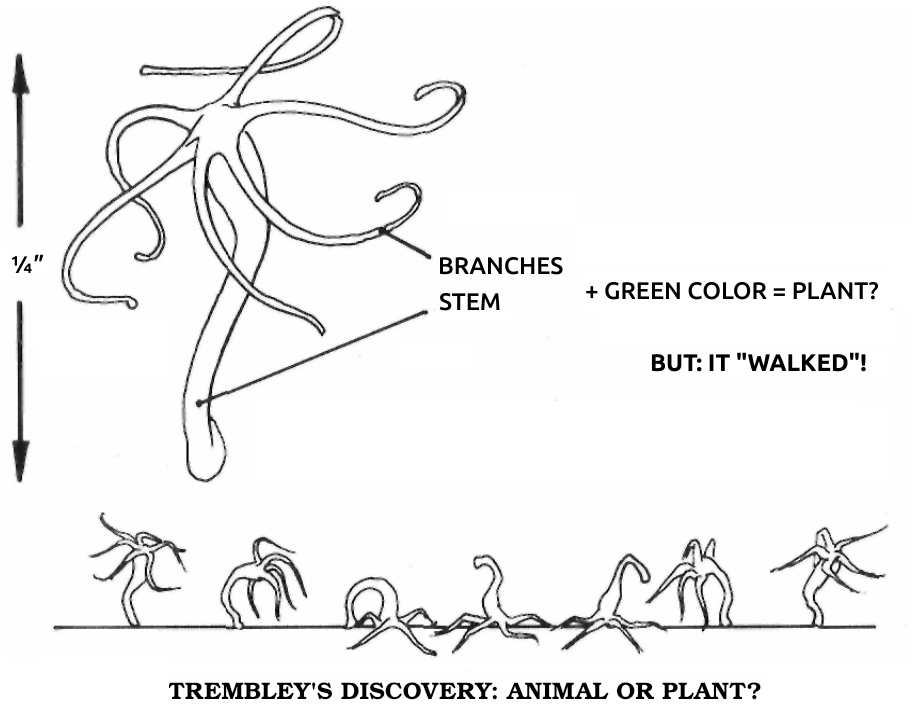

Réaumur made so many contributions to science that his study of regeneration was overlooked for decades. At that time no one really cared what strange things these unimportant animals did. However, all of the master’s work was well known to a younger naturalist, Abraham Trembley of Geneva, who supported himself, as did many educated men of that time, by serving as a private tutor for sons of wealthy families. In 1740, while so employed at an estate near The Hague, in Holland, Trembley was examining with a hand lens the small animals living in freshwater ditches and ponds. Many had been described by Réaumur, but Trembley chanced upon an odd new one. It was no more than a quarter of an inch long and faintly resembled a squid, having a cylindrical body topped with a crown of tentacles. However, it was a startling green color. To Trembley, green meant vegetation, but if this was a plant, it was a mighty peculiar one. When Trembley agitated the water in its dish, the tentacles contracted and the body shrank down to a nubbin, only to reexpand after a period of quiet. Strangest of all, he saw that the creature “walked” by somersaulting end over end.

Since they had the power of locomotion, Trembley would have assumed that these creatures were animals and moved on to other observations, if he hadn’t chanced to find a species colored green by symbiotic algae. To settle the animal-plant question, he decided to cut some in half. If they regrew, they must be plants with the unusual ability to walk, while if they couldn’t regenerate, they must be green animals.


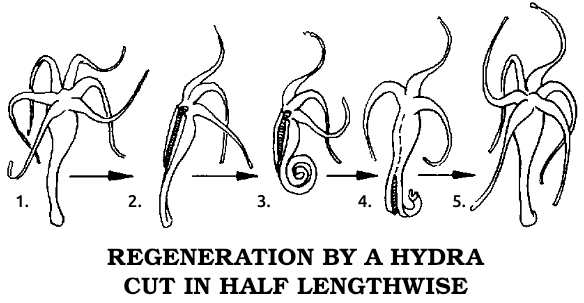

Trembley soon entered into a world that exceeded his wildest dreams. He divided the polyps, as he first called them, in the middle of their stalks. He then had two short pieces of stalk, one with attached tentacles, each of which contracted down to a tiny dot. Patiently watching, Trembley saw the two pieces later expand. The tentacle portion began to move normally, as though it were a complete organism. The other portion lay inert and apparently dead. Something must have made Trembley continue the experiment, for he watched this motionless object for nine days, during which nothing happened. He then noted that the cut end had sprouted three little “horns,” and within a few more days the complete crown of tentacles had been restored. Trembley now had two complete polyps as a result of cutting one in half! However, even though they regenerated, more observations convinced Trembley that the creatures were really animals. Not only did they move and walk, but their arms captured tiny water fleas and moved them to the “mouth,” located in the center of the ring of tentacles, which promptly swallowed the prey.

Trembley, then only thirty-one, decided to make sure he was right by having the great Réaumur confirm his findings before he published them and possibly made a fool of himself. He sent specimens and detailed notes to Réaumur, who confirmed that this was an animal with amazing powers of regeneration. Then he immediately read Trembley’s letters and showed his specimens to an astounded Royal Academy early in 1741. The official report called Trembley’s polyp more marvelous than the phoenix or the mythical serpent that could join together after being cut in two, for these legendary animals could only reconstitute themselves, while the polyp could make a duplicate. Years later Réaumur was still thunderstruck. As he wrote in Volume 6 of his series on insects, “This is a fact that I cannot accustom myself to seeing, after having seen and re-seen it hundreds of times.”


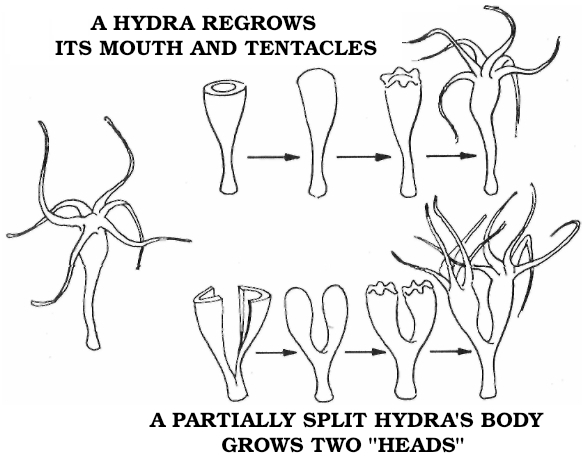

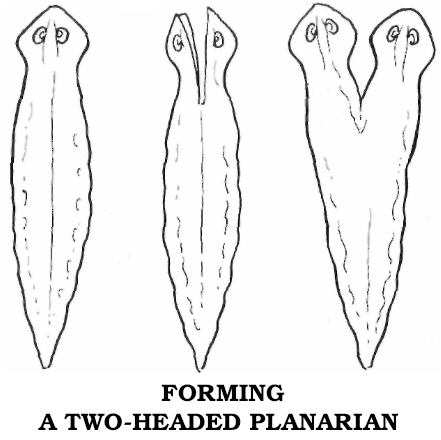

This was just the beginning, however. Trembley’s polyps performed even more wondrous feats. When cut lengthwise, each half of the stalk healed over without a scar and proceeded to regrow the missing tentacles. Trembley minced some polyps into as many pieces as he could manage, finding that a complete animal would regrow from each piece, as long as it included a remnant of the central stalk. In one instance he quartered one of the creatures, then cut each resulting polyp into three or four pieces, until he had made fifty animals from one.

His most famous experiment was the one that led him to name his polyp “hydra.” He found that by splitting the head lengthwise, leaving the stalk intact, he could produce one animal with two crowns of tentacles. By continuing the process he was able to get one animal with seven heads. When Trembley lopped them off, each one regrew, just like the mythical beast’s. But nature went legend one better: Each severed head went on to form a complete new animal as well.

Such experiments provided our first proof that entire animals can regenerate, and Trembley went on to observe that hydras could reproduce by simple budding, a small animal appearing on the side of the stalk and growing to full size. The implications of these discoveries were so revolutionary that Trembley delayed publishing a full account of his work until he’d been prodded by Réaumur and preceded in print by several others. The sharp division between plant and animal suddenly grew blurred, suggesting a common origin with some kind of evolution; basic assumptions about life had to be rethought. As a result, Trembley’s observations weren’t enthusiastically embraced by all. They inflamed several old arguments and offended many of the old guard. In this respect Trembley’s mentor Réaumur was a most unusual scientist for his time, and indeed for all time. Despite his prominence, he was ready to espouse radically new ideas and, most important, he didn’t steal the ideas of others, an all too common failing among scientists.


A furious debate was raging at the time of Trembley’s announcement. It concerned the origin of the individual—how the chicken came from the egg, for example. When scientists examined the newly laid egg, there wasn’t much there except two liquids, the white and the yolk, neither of which had any discernible structure, let alone anything resembling a chicken.

There were two opposite theories. The older one, derived from Aristotle, held that each animal in all its complexity developed from simple organic matter by a process called epigenesis, akin to our modern concept of cell differentiation. Unfortunately, Trembley himself was the first person to witness cell division under the microscope, and he didn’t realize that it was the normal process by which all cells multiplied. In an era knowing nothing of genes and so little of cells, yet beginning to insist on logical, scientific explanations, epigenesis seemed more and more absurd. What could possibly transform the gelatin of eggs and sperm into a frog or a human, without invoking that tired old deus ex machina the spirit, or inexplicable spark of life—unless the frog or person already existed in miniature inside the generative slime and merely grew in the course of development?

The latter idea, called preformation, had been ascendant for at least fifty years. It was so widely accepted that when the early microscopists studied drops of semen, they dutifully reported a little man, called a homunculus, encased in the head of each sperm—a fine example of science’s capacity for self-delusion. Even Réaumur, when he failed to find tiny butterfly wings inside caterpillars, assumed they were there but were too small to be seen. Only a few months before Trembley began slicing hydras, his cousin, Genevan naturalist Charles Bonnet, had proven (in an experiment suggested by the omnipresent Réaumur) that female aphids usually reproduced parthenogenetically (without mating). To Bonnet this demonstrated that the tiny adult resided in the egg, and he became the leader of the ovist preformationists.

The hydra’s regeneration, and similar powers in starfish, sea anemones, and worms, put the scientific establishment on the defensive. Réaumur had long ago realized that preformation couldn’t explain how a baby inherited traits from both father and mother. The notion of two homunculi fusing into one seed seemed farfetched. His regrowing crayfish claws showed that each leg would have to contain little preformed legs scattered throughout. And since a regenerated leg could be lost and replaced many times, the proto-legs would have to be very numerous, yet no one had ever found any.

Regeneration therefore suggested some form of epigenesis—perhaps without a soul, however, for the hydra’s anima, if it existed, was divisible along with the body and indistinguishable from it. It seemed as though some forms of matter itself possessed the spark of life. For lack of knowledge of cells, let alone chromosomes and genes, the epigeneticists were unable to prove their case. Each side could only point out the other’s inconsistencies, and politics gave preformationism the edge.

No wonder nonscientists often grew impatient with the whole argument. Oliver Goldsmith and Tobias Smollett mocked the naturalists for missing nature’s grandeur in their myopic fascination with “muck-flies.” Henry Fielding lampooned the discussion in a skit about the regeneration of money. Diderot thought of hydras as composite animals, like swarms of bees, in which each particle had a vital spark of its own, and lightheartedly suggested there might be “human polyps” on Jupiter and Saturn. Voltaire was derisively skeptical of attempts to infer the nature of the soul, animal or human, from these experiments. Referring in 1768 to the regenerating heads of snails, he asked, “What happens to its sensorium, its memory, its store of ideas, its soul, when its head has been cut off? How does all this come back? A soul that is reborn is an extremely curious phenomenon.” Profoundly disturbed by the whole affair, for a long time he simply refused to believe in animal regeneration, calling the hydra “a kind of small rush.”

It was no longer possible to doubt the discovery after the work of Lazzaro Spallanzani, an Italian priest for whom science was a full-time hobby. In a career spanning the second half of the eighteenth century, Spallanzani discovered the reversal of plant transpiration between light and darkness, and advanced our knowledge of digestion, volcanoes, blood circulation, and the senses of bats, but his most important work concerned regrowth. In twenty years of meticulous observation, he studied regeneration in worms, slugs, snails, salamanders, and tadpoles. He set new standards for thoroughness, often dissecting the amputated parts to make sure he’d removed them whole, then dissecting the replacements a few months later to confirm that all the parts had been restored.

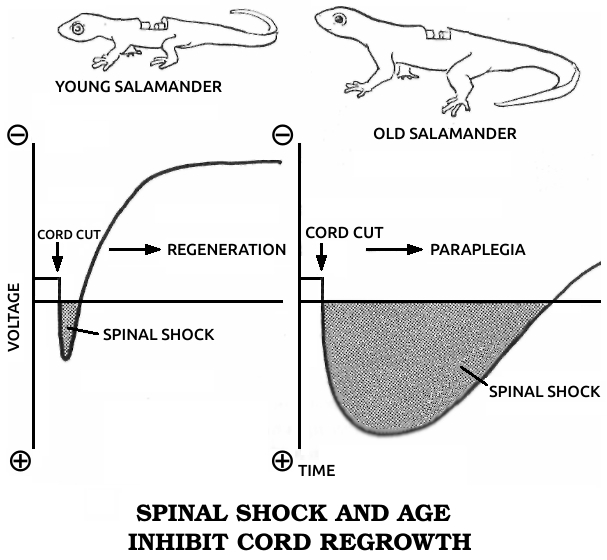

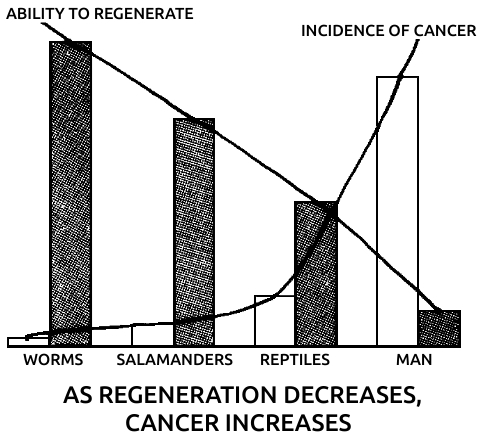

Spallanzani’s most important contribution to science was his discovery of the regenerative abilities of the salamander. It could replace its tail and limbs, all at once if need be. A young one performed this feat for Spallanzani six times in three months. He later found that the salamander could also replace its jaw and the lenses of its eyes, and then went on to establish two general rules of regeneration: Simple animals can regenerate more fully than complex ones, or, in modern terms, the ability to regenerate declines as one moves up the evolutionary scale. (The salamander is the main exception.) In ontogenetic parallel, if a species can regenerate, younger individuals do it better than older ones.

This early regeneration research, Spallanzani’s in particular, was a benchmark in modern biology. Gentlemanly observations buttressed by “common sense” gave way to a more rigorous kind of examination in which nothing was taken for granted. It had been “known” for perhaps ten thousand years that plants could regenerate and animals couldn’t. To many zoologists, even twenty years after Trembley’s initial discovery, the few known exceptions only proved the rule, for octopi, crayfish, hydras, worms, and snails seemed so unlike humans or the familiar mammals that they hardly counted. The lizard, the only other vertebrate regenerator then known, could manage no more than an imperfect tail. But the salamander—here was an animal we could relate to! This was no worm or snail or microscopic dot, but a four-limbed, two-eyed vertebrate that could walk and swim. While its legendary ability to withstand fire had been disproven, its body was big enough and its anatomy similar enough to ours to be taken seriously. Scientists could no longer assume that the underlying process had nothing to do with us. In fact, the questions with which Spallanzani ended his first report on the salamander have haunted biologists ever since: “Is it to be hoped that [higher animals] may acquire [the same power] by some useful dispositions? and should the flattering expectation of obtaining this advantage for ourselves be considered entirely as chimerical?”

Regeneration was largely forgotten for a century. Spallanzani had been so thorough that little else could be learned about it with the techniques of the time. Moreover, although his work strongly supported epigenesis, its impact was lost because the whole debate was swallowed up in the much larger philosophical conflict between vitalism and mechanism. Since biology includes the study of our own essence, it’s the most emotional science, and it has been the battleground for these two points of view throughout its history. Briefly, the vitalists believed in a spirit, called the anima or élan vital, that made living things fundamentally different from other substances. The mechanists believed that life could ultimately be understood in terms of the same physical and chemical laws that governed nonliving matter, and that only ignorance of these forces led people to invoke such hokum as a spirit. We’ll take up these issues in more detail later, but for now we need only note that the vitalists favored epigenesis, viewed as an imposition of order on the chaos of the egg by some intangible “vital” force. The mechanists favored preformation. Since science insisted increasingly on material explanations for everything, epigenesis lost out despite the evidence of regeneration.

Mechanism dominated biology more and more, but some problems remained. The main one was the absence of the little man in the sperm. Advances in the power and resolution of microscopes had clearly shown that no one was there. Biologists were faced with the generative slime again, featureless goo from which, slowly and magically, an organism appeared.

After 1850, biology began to break up into various specialties. Embryology, the study of development, was named and promoted by Darwin himself, who hoped (in vain) that it would reveal a precise history of evolution (phylogeny) recapitulated in the growth process (ontogeny). In the 1880s, embryology matured as an experimental science under the leadership of two Germans, Wilhelm Roux and August Weismann. Roux studied the stages of embryonic growth in a very restricted, mechanistic way that revealed itself even in the formal Germanic title, Entwicklungsmechanik (“developmental mechanics”), that he applied to the whole field. Weismann, however, was more interested in how inheritance passed the instructions for embryonic form from one generation to the next. One phenomenon—mitosis, or cell division—was basic to both transactions. No matter how embryos grew and hereditary traits were transferred, both processes had to be accomplished by cellular actions.

Although we’re taught in high school that Robert Hooke discovered the cell in 1665, he really discovered that cork was full of microscopic holes, which he called cells because they looked like little rooms. The idea that they were the basic structural units of all living things came from Theodor Schwann, who proposed this cell theory in 1838. However, even at that late date, he didn’t have a clear idea of the origin of cells. Mitosis was unknown to him, and he wasn’t too sure of the distinction between plants and animals. His theory wasn’t fully accepted until two other German biologists, F. A. Schneider and Otto Bütschli, reintroduced Schwann’s concept and described mitosis in 1873.

Observations of embryogenesis soon confirmed its cellular basis. The fertilized egg was exactly that, a seemingly unstructured single cell. Embryonic growth occurred when the fertilized egg divided into two other cells, which promptly divided again. Their progeny then divided, and so on. As they proliferated, the cells also differentiated; that is, they began to show specific characteristics of muscle, cartilage, nerve, and so forth. The creature that resulted obviously had several increasingly complex levels of organization; however, Roux and Weismann had no alternative but to concentrate on the lowest one, the cell, and try to imagine how the inherited material worked at that level.

Weismann proposed a theory of “determiners,” specific chemical structures coded for each cell type. The fertilized egg contained all the determiners, both in type and in number, needed to produce every cell in the body. As cell division proceeded, the daughter cells each received half of the previous stock of determiners, until in the adult each cell possessed only one. Muscle cells contained only the muscle determiner, nerve cells only the one for nerves, and so on. This meant that once a cell’s function had been fixed, it could never be anything but that one kind of cell.

In one of his first experiments, published in 1888, Roux obtained powerful support for this concept. He took fertilized frog eggs, which were large and easy to observe, and waited until the first cell division had occurred. He then separated the two cells of this incipient embryo. According to the theory, each cell contained enough determiners to make half an embryo, and that was exactly what Roux got—two half-embryos. It was hard to argue with such a clear-cut result, and the determiner theory was widely accepted. Its triumph was a climactic victory for the mechanistic concept of life, as well.

One of vitalism’s last gasps came from the work of another German embryologist, Hans Driesch. Initially a firm believer in Entwicklungsmechanik, Driesch later found its concepts deficient in the face of life’s continued mysteries. For example, using sea urchin eggs, he repeated Roux’s famous experiment and obtained a whole organism instead of a half. Many other experiments convinced Driesch that life had some special innate drive, a process that went against known physical laws. Drawing on the ancient Greek idea of the anima, he proposed a non-material, vital factor that he called entelechy. The beginning of the twentieth century wasn’t a propitious time for such an idea, however, and it wasn’t popular.

As the nineteenth century drew to a close and the embryologists continued to struggle with the problems of inheritance, they found they still needed a substitute for the homunculus. Weismann’s determiners worked fine for embryonic growth, but regeneration was a glaring exception, and one that didn’t prove the rule. The original theory had no provision for a limited replay of growth to replace a part lost after development was finished. Oddly enough, the solution had already been provided by a man almost totally forgotten today, Theodor Heinrich Boveri.

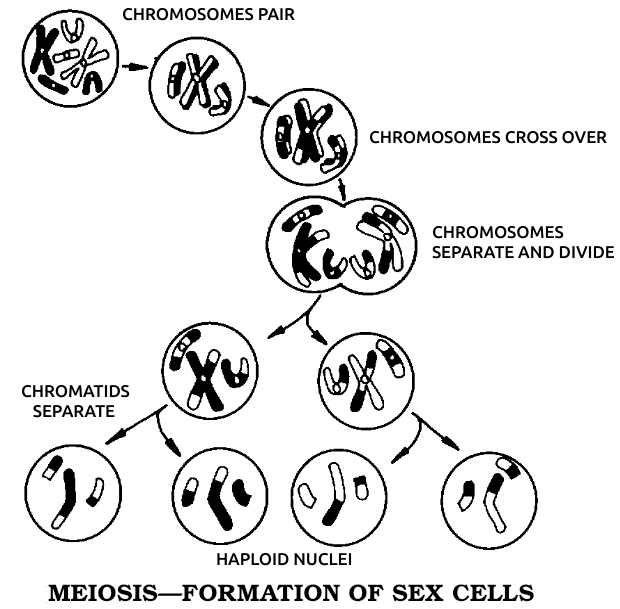

Working at the University of Munich in the 1880s, Boveri discovered almost every detail of cell division, including the chromosomes. Not until the invention of the electron microscope did anyone add materially to his original descriptions. Boveri found that all nonsexual cells of any one species contained the same number of chromosomes. As growth proceeded by mitosis, these chromosomes split lengthwise to make two of each so that each daughter cell then had the same number of chromosomes. The egg and sperm, dividing by a special process called meiosis, wound up with exactly half that number, so that the fertilized egg would start out with a full complement, half from the father and half from the mother. He reached the obvious conclusions that the chromosomes transmitted heredity, and that each one could exchange smaller units of itself with its counterpart from the other parent.

At first this idea wasn’t well received. It was strenuously opposed by Thomas Hunt Morgan, a respected embryologist at Columbia University and the first American participant in this saga. Later, when Morgan found that the results of his own experiments agreed with Boveri’s, he went on to describe chromosome structure in more detail, charting specific positions, which he called genes, for inherited characteristics. Thus the science of genetics was born, and Morgan received the Nobel Prize in 1933. So much for Boveri.

Although Morgan was most famous for his genetics research on fruit flies, he got his start by studying salamander limb regeneration, about which he made a crucial observation. He found that the new limb was preceded by a mass of cells that appeared on the stump and resembled the unspecialized cell mass of the early embryo. He called this structure the blastema and later concluded that the problem of how a regenerated limb formed was identical to the problem of how an embryo developed from the egg.

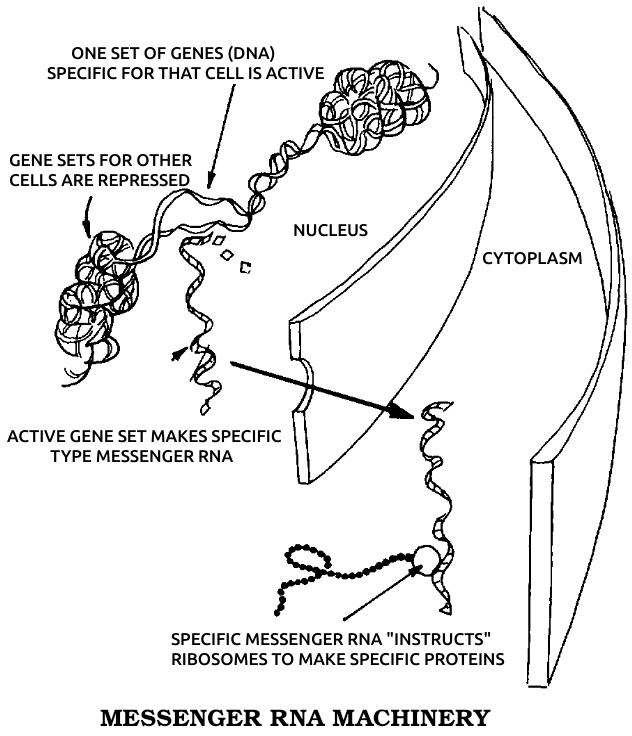

Morgan postulated that the chromosomes and genes contained not only the inheritable characteristics but also the code for cell differentiation. A muscle cell, for example, would be formed when the group of genes specifying muscle were in action. This insight led directly to our modern understanding of the process: In the earliest stages of the embryo, every gene on every chromosome is active and available to every cell. As the organism develops, the cells form three rudimentary tissue layers—the endoderm, which develops into the glands and viscera; the mesoderm, which becomes the muscles, bones, and circulatory system; and the ectoderm, which gives rise to the skin, sense organs, and nervous system. Some of the genes are already being turned off, or repressed, at this stage. As the cells differentiate into mature tissues, only one specific set of genes stays switched on in each kind. Each set can make only certain types of messenger ribonucleic acid (mRNA), the “executive secretary” chemical by means of which DNA “instructs” the ribosomes (the cell’s protein-factory organelles) to make the particular proteins that distinguish a nerve cell, for example, from a muscle or cartilage cell.

There’s a superficial similarity between this genetic mechanism and the old determiner theory. The crucial difference is that, instead of determiners being segregated until only one remains in each cell, the genes are repressed until only one set remains active in each cell. However, the entire genetic blueprint is carried by every cell nucleus.
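The contrast between the two models can be made concrete with a toy sketch. It is only an illustration under simplifying assumptions: the four cell types, the list-based "stock" of determiners, and the function names `determiner_lineage` and `differentiate` are all hypothetical, chosen here for clarity rather than taken from Weismann or Morgan.

```python
# Toy contrast between the determiner model and the gene-repression model.
# Every name below is an illustrative assumption, not historical terminology.

CELL_TYPES = ["muscle", "nerve", "cartilage", "skin"]


def determiner_lineage(stock):
    """Determiner-style division: each daughter cell receives half of the
    parent's stock, until every cell holds a single determiner."""
    if len(stock) <= 1:
        return [stock]
    mid = len(stock) // 2
    return determiner_lineage(stock[:mid]) + determiner_lineage(stock[mid:])


def differentiate(genome, cell_type):
    """Repression-style differentiation: the cell keeps a full copy of the
    genome, but only one set of genes is marked as active."""
    return {"genome": list(genome), "active": cell_type}


determiner_cells = determiner_lineage(CELL_TYPES)
repression_cells = [differentiate(CELL_TYPES, t) for t in CELL_TYPES]

# Determiner model: the first adult cell has nothing left but "muscle".
print(determiner_cells[0])
# Repression model: the same kind of cell still carries the whole blueprint.
print(repression_cells[0]["genome"], "active:", repression_cells[0]["active"])
```

In the first model the information for every other cell type has been physically handed away, so a cell can never be anything else. In the second, nothing is lost; the other gene sets are merely switched off.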